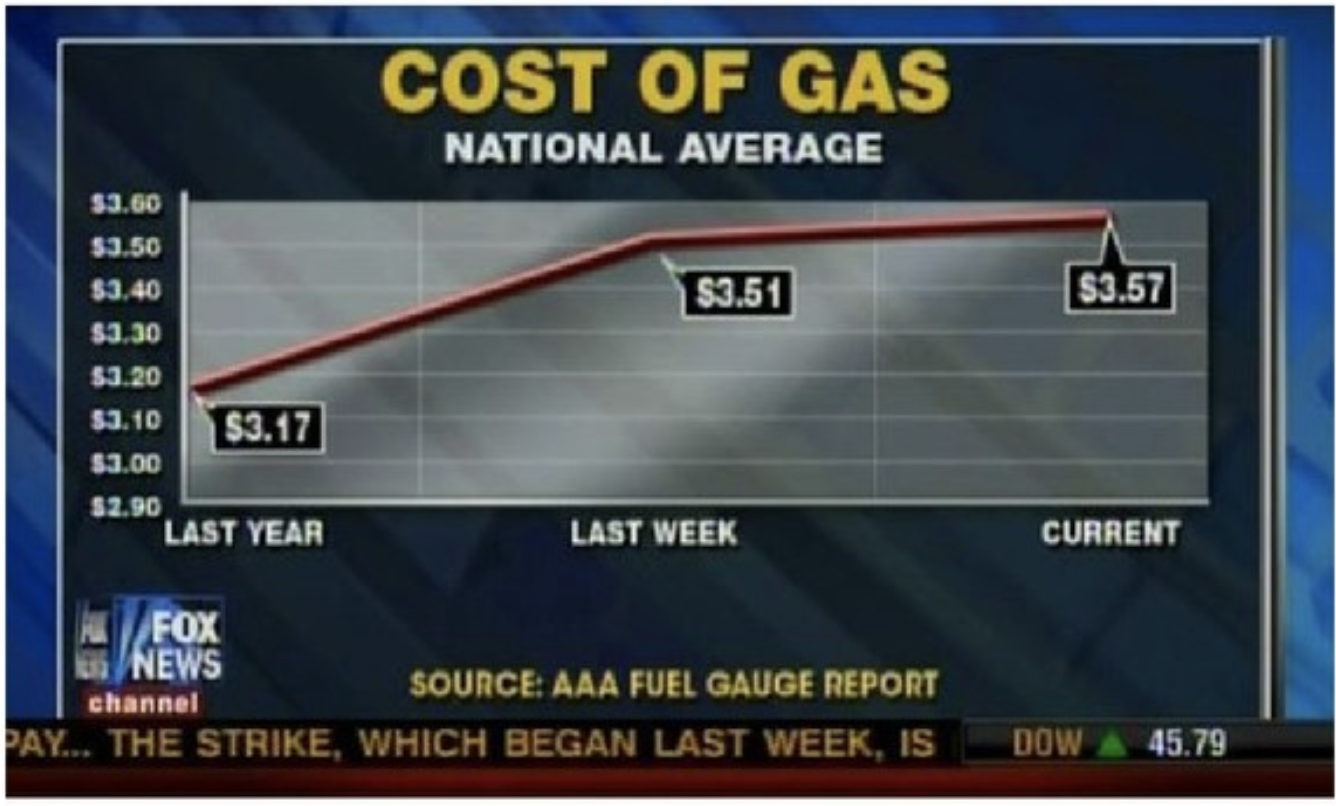

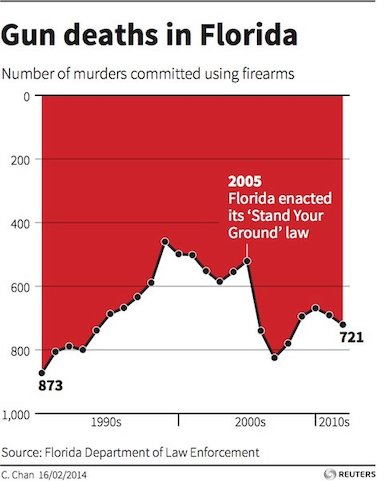

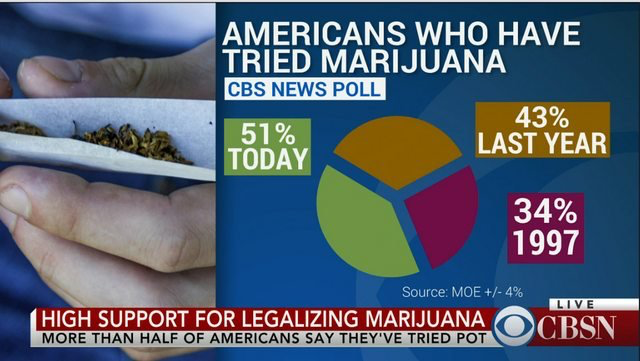

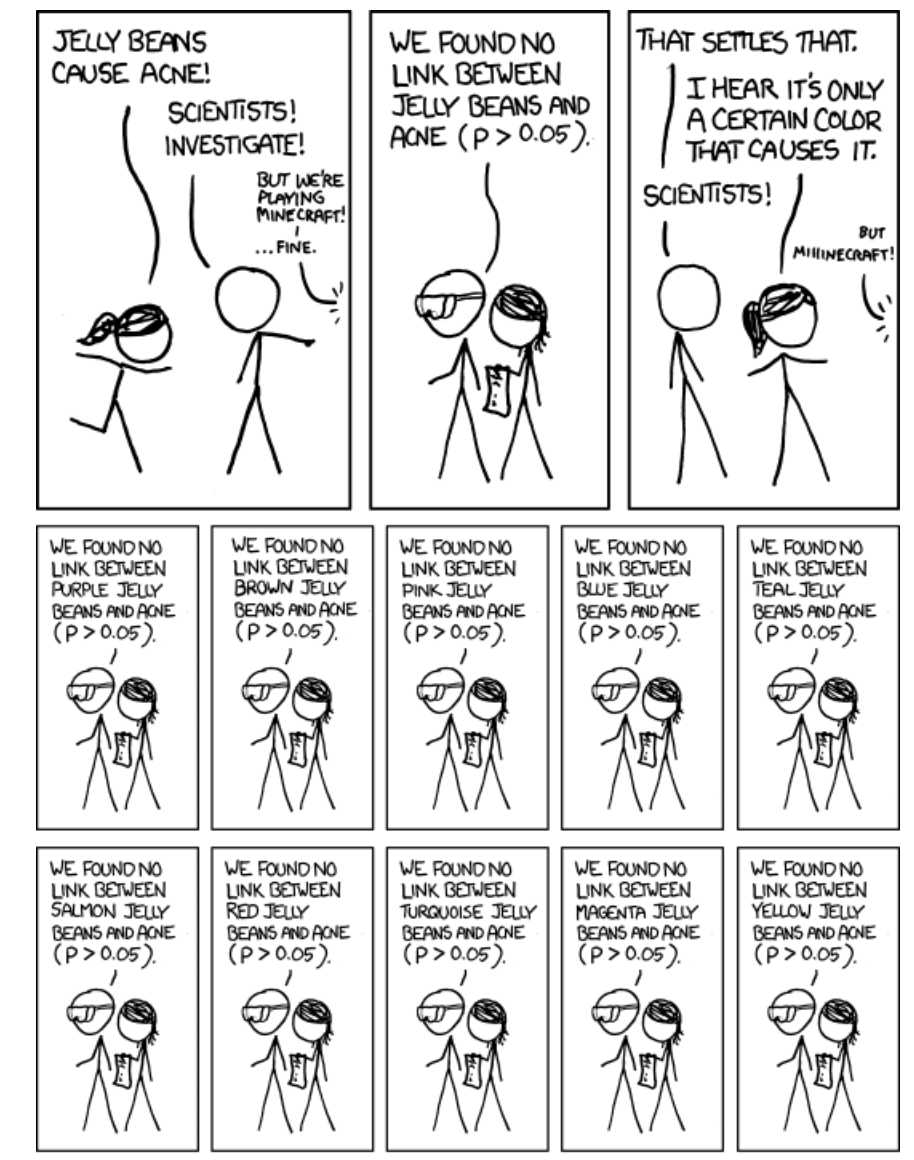

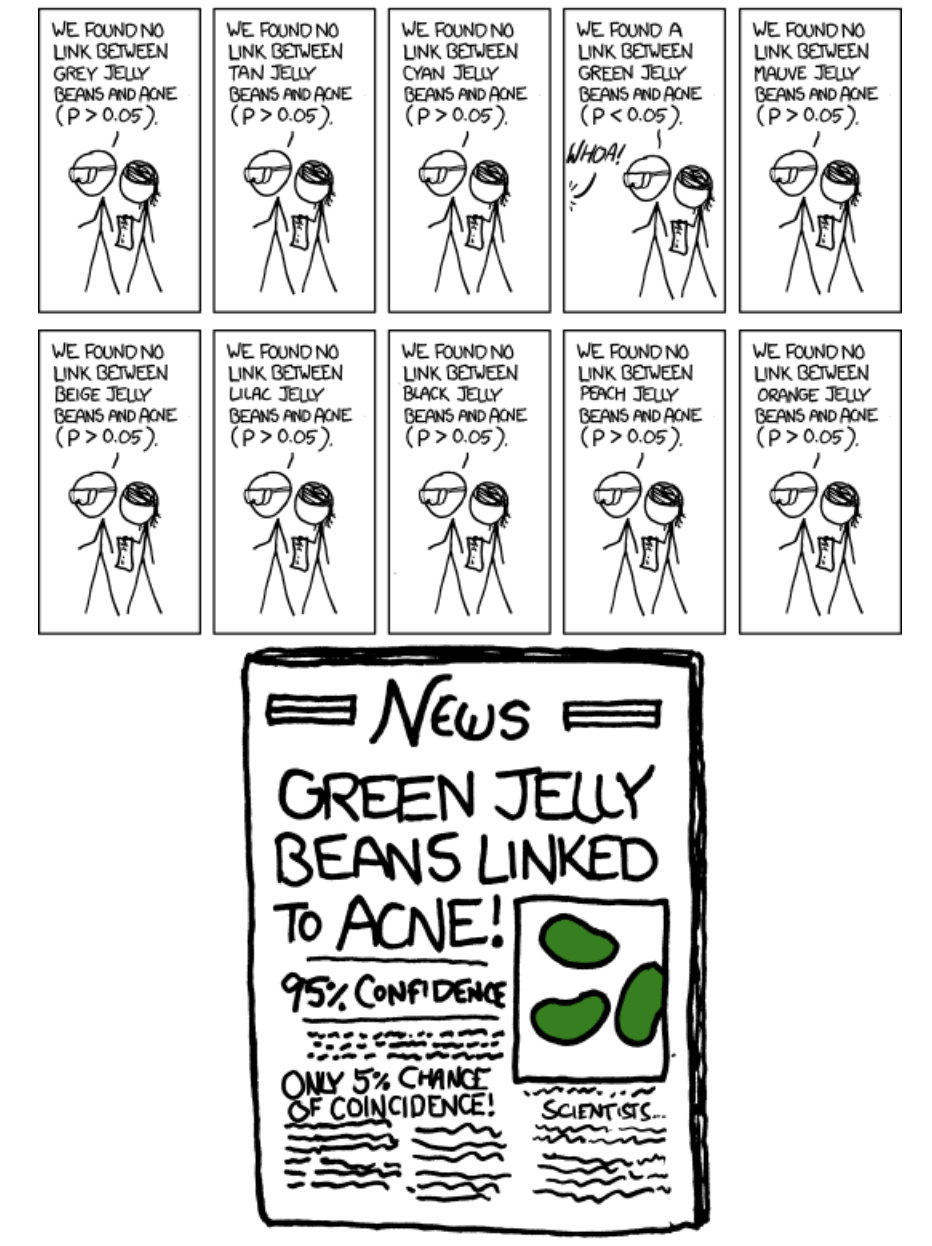

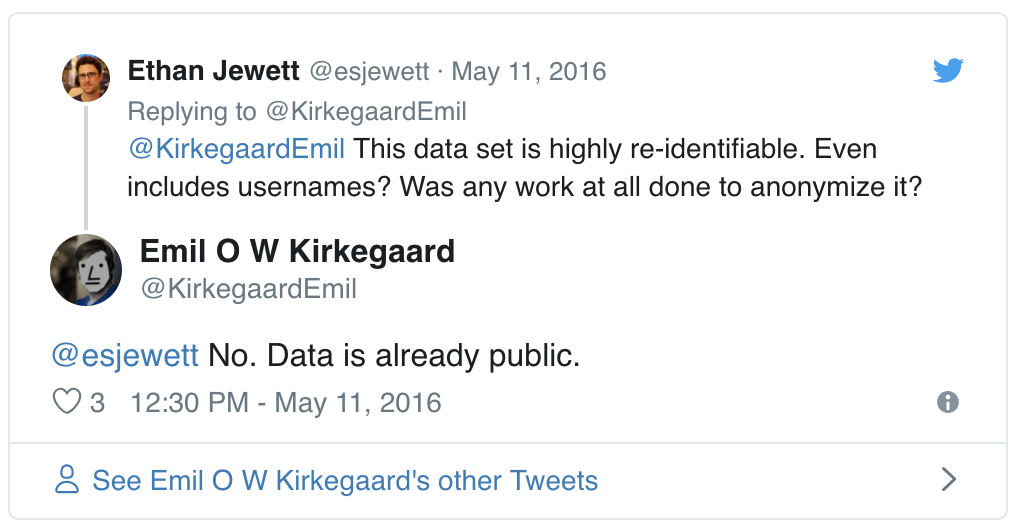

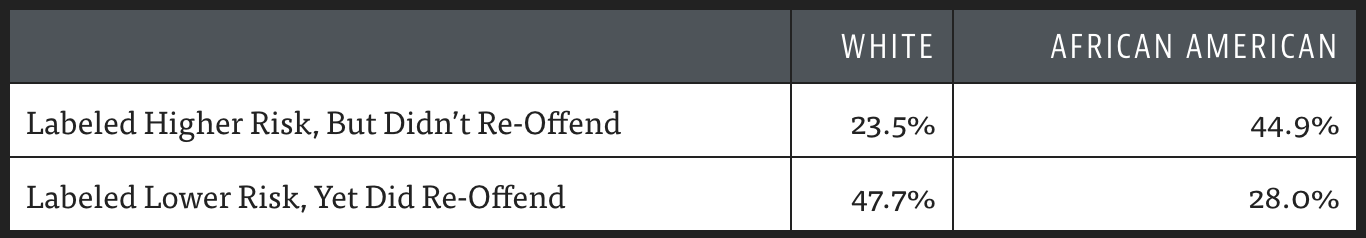

class: center, middle, inverse, title-slide

# Data Science Ethics

### Dr. Maria Tackett

### 12.03.19

---

layout: true

<div class="my-footer">
<span>
<a href="http://datasciencebox.org" target="_blank">datasciencebox.org</a>
</span>
</div>

---

class: middle, center

### [Click for PDF of slides](12-data-science-ethics.pdf)

---

### Announcements

- Project Data Analysis **due TODAY at 11:59p**

- HW 06 - due **Friday 12/6 at 11:59p**

- Project final write up & presentation
    - due **Friday, 12/13 at 11:59p**
    - Presentations Saturday, 12/14
        - Lab 01: 7p - 8p
        - Lab 02: 8p - 9p
        - Lab 03: 9p - 10p

- Exam 02 Extra Credit
    + 90% response rate on course eval: +3 pts on Exam 02 grades

---

### Agenda

1. Misrepresenting data
2. Misusing p-values
3. Privacy
4. Algorithmic bias

---

class: middle, center

## Misrepresenting data

---

.question[
What is wrong with this graph? How would you fix it?
]

.center[  ]

---

.question[
What is wrong with this graph? How would you fix it?
]

.center[  ]

---

.question[
What is wrong with this graph? How would you fix it?
]

.center[  ]

---

class: middle, center

## Misusing p-values

---

### What is a p-value?

.center[
<iframe src="https://fivethirtyeight.abcnews.go.com/video/embed/56150342" width="640" height="360" scrolling="no" style="border:none;" allowfullscreen></iframe>

Source: ["Not Even Scientists Can Easily Explain p-values"](https://fivethirtyeight.com/features/not-even-scientists-can-easily-explain-p-values/)
]

---

### Statistically significant?
.pull-left[

<small>Source: [https://xkcd.com/882/](https://xkcd.com/882/)</small>
]

.pull-right[  ]

---

class: middle

### *Let’s repeat it: P-values don’t necessarily tell you if an experiment “worked” or not*

from [800 scientists say it’s time to abandon “statistical significance”](https://www.vox.com/latest-news/2019/3/22/18275913/statistical-significance-p-values-explained)

---

### Alternate ways to evaluate evidence

- Concentrate on effect sizes
    - How big of a difference does an intervention make?
    - Is it practically meaningful?

- Use confidence intervals to estimate effect size

- Ask whether the result is from a novel study or a replication (put some more weight into a theory many labs have looked into)

- Ask whether underlying data is freely accessible (so anyone can check the math)

<br><br><br>

<small>Source: [800 scientists say it’s time to abandon “statistical significance”](https://www.vox.com/latest-news/2019/3/22/18275913/statistical-significance-p-values-explained) </small>

---

### Alternate ways to evaluate evidence

- Use alternative statistical techniques like likelihood ratios and Bayes factors
    - P-values ask the question “how rare are my results?”
    - Likelihood ratios and Bayes factors ask the question “what is the probability my hypothesis is the best explanation for the results we found?”

- You'll learn more about these in future statistics classes 😄

<br><br><br><br><br><br><br><br>

<small>Source: [800 scientists say it’s time to abandon “statistical significance”](https://www.vox.com/latest-news/2019/3/22/18275913/statistical-significance-p-values-explained) </small>

---

class: middle, center

## Privacy

---

### OkCupid Data Breach

- In 2016, researchers published data on 70,000 OkCupid users, including usernames, political leanings, drug usage, and intimate sexual details.

>"Some may object to the ethics of gathering and releasing this data.
However, all the data found in the dataset are or were already publicly available, so releasing this dataset merely presents it in a more useful form."
>
> Researchers Emil Kirkegaard and Julius Daugbjerg Bjerrekær

- Although the researchers did not release the real names and pictures of the OkCupid users, critics noted that their identities could easily be uncovered from the details provided, such as the usernames.

<br>

<small>[*OkCupid Study Reveals the Perils of Big-Data Science*](https://www.wired.com/2016/05/okcupid-study-reveals-perils-big-data-science/)</small>

---

class: middle

.question[
When collecting and analyzing social media data, how do you make sure you don't violate reasonable expectations of privacy?
]

.center[  ]

---

### Facebook & Cambridge Analytica

.center[  ]

<br>

[How Cambridge Analytica turned Facebook 'likes' into a lucrative political tool](https://www.theguardian.com/technology/2018/mar/17/facebook-cambridge-analytica-kogan-data-algorithm)

---

class: middle, center

## Algorithmic bias

---

class: middle, center

### The Hathaway Effect

---

.center[  ]

["Does Anne Hathaway News Drive Berkshire Hathaway's Stock?"](https://www.theatlantic.com/technology/archive/2011/03/does-anne-hathaway-news-drive-berkshire-hathaways-stock/72661/)

---

### The Hathaway Effect

- **Oct. 3, 2008** - Rachel Getting Married opens: BRK.A up .44%
- **Jan. 5, 2009** - Bride Wars opens: BRK.A up 2.61%
- **Feb. 8, 2010** - Valentine’s Day opens: BRK.A up 1.01%
- **March 5, 2010** - Alice in Wonderland opens: BRK.A up .74%
- **Nov. 24, 2010** - Love and Other Drugs opens: BRK.A up 1.62%
- **Nov. 29, 2010** - Anne announced as co-host of the Oscars: BRK.A up .25%

<br><br><br><br>

[The Hathaway Effect: How Anne Gives Warren Buffett a Rise](https://www.huffpost.com/entry/the-hathaway-effect-how-a_b_830041)

---

class: middle, center

### Algorithms in Criminal Justice

---

### Machine Bias

.center[  ]

There’s software used across the country to predict future criminals.
And it's biased...

[ProPublica, May 23, 2016](https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing)

---

class: middle

>“Although these measures were crafted with the best of intentions, I am concerned that they inadvertently undermine our efforts to ensure individualized and equal justice,” he said, adding, “they may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society.”
>
> Then U.S. Attorney General Eric Holder (2014)

---

### ProPublica analysis

**Data:** Risk scores assigned to more than 7,000 people arrested in Broward County, Florida, in 2013 and 2014 + whether they were charged with new crimes over the next two years

--

**Results:**

- 20% of those predicted to commit violent crimes actually did

- Algorithm had higher accuracy (61%) when the full range of crimes was taken into account (e.g. misdemeanors)

- Algorithm was more likely to falsely flag African American defendants as future criminals, at almost twice the rate of Caucasian defendants

---

class: middle

Read more at [propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing](https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing).

---

class: middle, center

### Amazon

---

### Amazon's Experimental Hiring Algorithm

- Amazon used AI to give job candidates scores ranging from one to five stars, much like shoppers rate products on Amazon, according to Reuters' sources

- The company realized its new system was not rating candidates for software developer jobs and other technical posts in a gender-neutral way

- Amazon’s system taught itself that male candidates were preferable

>"Gender bias was not the only issue. Problems with the data that underpinned the models’ judgments meant that unqualified candidates were often recommended for all manner of jobs, the people said."
<br><br>

["Amazon scraps secret AI recruiting tool that showed bias against women"](https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G)

---

### Amazon's Experimental Hiring Algorithm

.center[
<img src="img/12/tech-gender.png" width="50%" />
]

<br>

["Amazon scraps secret AI recruiting tool that showed bias against women"](https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G)

---

class: middle, center

## Algorithmic Justice

---

### Algorithmic Justice

.center[
<iframe width="560" height="315" src="https://www.youtube.com/embed/UG_X_7g63rY" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>
]

<br>
<br>

Interview with Joy Buolamwini: ['A White mask worked better': why algorithms are not colour blind](https://www.theguardian.com/technology/2017/may/28/joy-buolamwini-when-algorithms-are-racist-facial-recognition-bias)

---

### Review

.question[
A company uses a machine learning algorithm to determine which job advertisement to display for users searching for technology jobs. Based on past results, the algorithm tends to display lower paying jobs for women than for men (after controlling for other characteristics). What ethical considerations might be considered when reviewing this algorithm?
]

Source: *Modern Data Science with R*, by Baumer, Kaplan, and Horton

---

class: center, middle

### Further study on data science ethics

---

### Further reading

.pull-left[  ]

.pull-right[
[*Statistical Inference in the 21st Century: A World Beyond p < 0.05*](https://www.tandfonline.com/toc/utas20/current)

March 2019 issue of *The American Statistician* (free access as a student through the Duke library)
]

---

### Further reading

.pull-left[  ]

.pull-right[
[Ethics and Data Science](https://www.amazon.com/Ethics-Data-Science-Mike-Loukides-ebook/dp/B07GTC8ZN7) by Mike Loukides, Hilary Mason, DJ Patil (free Kindle download)
]

---

### Further reading

.pull-left[  ]

.pull-right[
[How Charts Lie: Getting Smarter About Visual Information](https://wwnorton.com/books/9781324001560) by Alberto Cairo
]

---

### Further reading

.pull-left[  ]

.pull-right[
[Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy](https://www.amazon.com/Ethics-Data-Science-Mike-Loukides-ebook/dp/B07GTC8ZN7) by Cathy O'Neil
]

---

### Further watching

.center[
<iframe width="560" height="315" src="https://www.youtube.com/embed/MfThopD7L1Y" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Predictive Policing: Bias In, Bias Out by Kristian Lum
]

---

### Parting thoughts

- At some point during your data science journey you will learn tools that can be used unethically

- You might also be tempted to use your knowledge in a way that is ethically questionable either because of business goals or for the pursuit of further knowledge (or because your boss told you to do so)

.question[
How do you train yourself to make the right decisions (or reduce the likelihood of accidentally making the wrong decisions) at those points?
]

---

### Do good with data

- Data Science for Social Good: http://www.dssgfellowship.org/
- DataKind: https://www.datakind.org/
- Data Values & Practices: https://datapractices.org/manifesto/

---

### Acknowledgement

These slides were largely based on [*Data Science Ethics*](http://www2.stat.duke.edu/courses/Fall18/sta112.01/slides/u3_d01-ethics/u3_d01-ethics.html)
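
---

### Appendix: false positive rates by group

The ProPublica result that the algorithm falsely flagged one group at almost twice the rate of another is a statement about *false positive rates*: among people who did **not** go on to reoffend, what share were labeled high risk? Below is a minimal sketch of that calculation in Python. The records, the group labels, and the function name `false_positive_rates` are made up for illustration. They are not the ProPublica data or its method.

```python
from collections import defaultdict

# Hypothetical toy records, NOT real data:
# (group, flagged_high_risk, reoffended)
records = (
    [("group_1", True, False)] * 4     # flagged, did not reoffend (false positives)
    + [("group_1", False, False)] * 6  # not flagged, did not reoffend
    + [("group_1", True, True)] * 5    # flagged, did reoffend
    + [("group_2", True, False)] * 2
    + [("group_2", False, False)] * 8
    + [("group_2", True, True)] * 5
)


def false_positive_rates(rows):
    """Return {group: share of non-reoffenders who were flagged high risk}."""
    false_positives = defaultdict(int)
    non_reoffenders = defaultdict(int)
    for group, flagged, reoffended in rows:
        if not reoffended:
            non_reoffenders[group] += 1
            if flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / non_reoffenders[g] for g in non_reoffenders}


rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: false positive rate = {rate:.2f}")
print(f"ratio = {rates['group_1'] / rates['group_2']:.2f}")
```

Breaking errors out by group like this is one way to notice a disparity that a single overall accuracy figure would not show.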