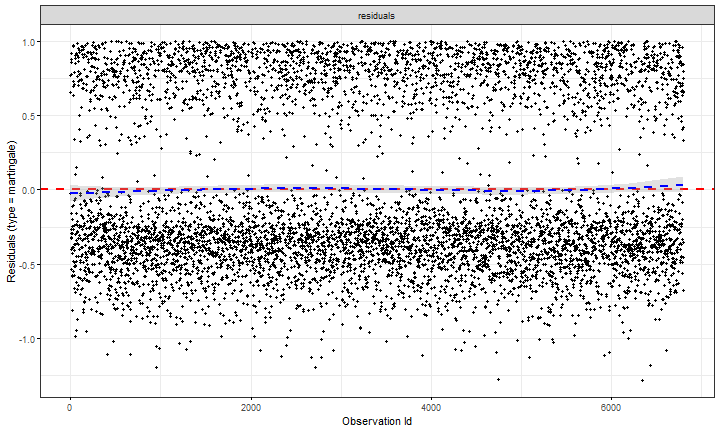

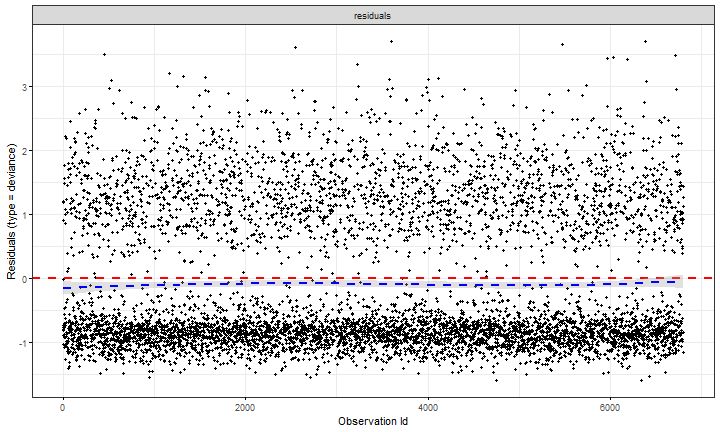

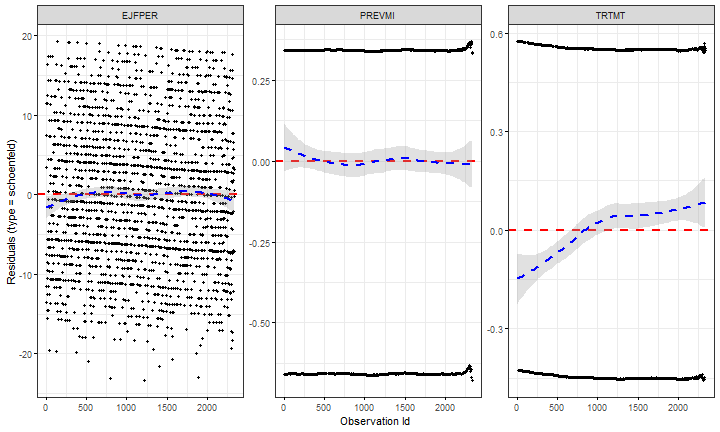

class: center, middle, inverse, title-slide .title[ # Diagnostics for the Cox model ] .author[ ### Yue Jiang ] .date[ ### STA 490/690 ] --- ### The Cox proportional hazards model `\begin{align*} \lambda(t) &= \lambda_0(t)\exp\left(\beta_1x_1 + \beta_2x_2 + \cdots + \beta_px_p \right) \end{align*}` ```r coxm1 <- coxph(Surv(DWHFDAYS, DWHF) ~ TRTMT + EJFPER + PREVMI, data = dig) summary(coxm1) ``` ``` ## Call: ## coxph(formula = Surv(DWHFDAYS, DWHF) ~ TRTMT + EJFPER + PREVMI, ## data = dig) ## ## n= 6799, number of events= 2332 ## (1 observation deleted due to missingness) ## ## coef exp(coef) se(coef) z Pr(>|z|) ## TRTMT -0.289745 0.748454 0.041666 -6.954 3.55e-12 *** ## EJFPER -0.038061 0.962654 0.002381 -15.985 < 2e-16 *** ## PREVMI 0.047921 1.049087 0.043638 1.098 0.272 ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## exp(coef) exp(-coef) lower .95 upper .95 ## TRTMT 0.7485 1.3361 0.6898 0.8121 ## EJFPER 0.9627 1.0388 0.9582 0.9672 ## PREVMI 1.0491 0.9532 0.9631 1.1428 ## ## Concordance= 0.605 (se = 0.006 ) ## Likelihood ratio test= 304.4 on 3 df, p=<2e-16 ## Wald test = 302.5 on 3 df, p=<2e-16 ## Score (logrank) test = 306.2 on 3 df, p=<2e-16 ``` --- ### Review from the AFT model... If `\(T_i\)` has survival function `\(S_i(t)\)`, then `\(S_i(T_i)\)` should have a uniform distribution on (0, 1) and `\(\Lambda_i(T_i)\)` should follow Exp(1) distribution by change of variables. Thus, if the model is correct, then the estimated cumulative hazard `\(\hat{\Lambda}\)` at observed times should be a censored sample from Exp(1). Plotting `\(\log(-\log\hat{S}(Y_i))\)` against `\(\log(Y_i)\)` should thus follow a straight line through the origin at a 45-degree angle. I didn't give these things a name last time, but these `\(\hat{\Lambda}_i(Y_i)\)` are known as .vocab[Cox-Snell residuals]. --- ### Cox-Snell residuals Cox-Snell residuals give us an idea of whether we might have incorrectly specified a model, but in the case that we *don't* have a good fit, looking at the Cox-Snell residuals doesn't tell us *why* our fit is bad, or *for whom* the model might be predicting poorly. .question[ Think back to the notion of a residual in linear regression models you have seen previously. How have you used residuals? What was their interpretation? Can you think of a way to come up with a "similar" concept for time-to-event models? ] --- ### Ok we finally can't avoid these (ignore covariates for now; independently right-censored data only). A .vocab[martingale] is a stochastic process where the conditional expectation of the "next value," conditionally on everything that's happened so far, is equal to its current value (assumed to be finite). The notion of "all the information we've gathered so far" includes *everything* in the past, not just one step prior. --- ### Three processes The .vocab[counting process] `\(N_i(t)\)` is a right-continuous step function that starts at 0 and jumps to 1 when individual `\(i\)` fails. The .vocab[at-risk process] `\(Y_i(t)\)` is a right-continuous step function that starts at 1 and drops to 0 when individual `\(i\)` is no longer at risk of failing (that is, fails or is censored). Let `\(N(t) = \sum N_i(t)\)` be the total number of failures by time `\(t\)` and similarly `\(Y(t)\)` the total number of individuals still at risk at time `\(t\)`. .question[ What is `\(Y(t)\lambda(t)dt\)`, where `\(\lambda(t)dt = P(t \le T < t + \delta t | T \ge t)\)`? ] --- ### The intensity process The intensity process `\(Y(t)\lambda(t)dt\)` is the expected number of deaths at time `\(t\)` (think about binomial expectation). .question[ Now, integrate what happens time `\(0\)` through `\(t\)`: `\(A(t) = \int_0^t Y(u)\lambda(u)du\)`. What does this quantity represent? ] (I'm using `\(A\)` instead of `\(\Lambda\)` just for tradition, honestly) --- ### The martingale `\(M = N - A\)` We can show that `\(E(N(t)) = E(A(t))\)` (justify this to yourself by waving your hands really hard, or if you've taken stochastic processes, prove it!). The zero-mean process `\(N(t) - A(t)\)` is a martingale. .question[ How can we bring in covariates? How might we use this intuition to think about a residual? ] --- ### Martingale residuals Given covariates, the estimated cumulative intensity process for individual `\(i\)` is: `\(\displaystyle \widehat{A}_i(t | \mathbf{x}_i, \widehat{\boldsymbol\beta}) = \int_0^t Y_i(u)\exp(\mathbf{x}_i\widehat{\boldsymbol\beta})\widehat{\lambda}_0(u)du\)` We can use the construction on the previous slides to define .vocab[martingale residuals], which are based on the difference between the observed number of events up until time `\(t\)` and the expected count based on the fitted model. .vocab[Deviance residuals] are a normalized transformation of the martingale residuals that correct their skewness. They are randomly distributed around mean 0 with a variance of 1. In practice, these residuals are useful for finding potential outliers: negative values "lived longer than expected" and positive values "died too soon." --- ### Cox model diagnostics ```r library(survminer) ggcoxdiagnostics(coxm1, type = "martingale", linear.predictions = F) ``` <!-- --> --- ### Cox model diagnostics ```r library(survminer) ggcoxdiagnostics(coxm1, type = "deviance", linear.predictions = F) ``` <!-- --> --- ### Proportional hazards Now let's think about proportional hazards. What does the proportional hazards assumption mean? .question[ Consider a single covariate. Can you come up with an intuitive way to assess whether proportional hazards might be violated for that variable? ] --- ### A thought experiment **If proportional hazards is satisfied,** what is the probability that an arbitrary individual `\(j\)` fails at time `\(t_j\)`? `\begin{align*} \frac{\exp(\mathbf{x}_j\boldsymbol\beta)}{\sum_{k \in \mathcal{R}(t_j)}\exp(\mathbf{x}_k\boldsymbol\beta)} \end{align*}` .question[ Consider one of the covariates, let's call it `\(x_q\)`. Consider the risk set of patients at time `\(t_j\)`. What is the expected value of `\(x_q\)` at time `\(t_j\)`? ] --- ### A thought experiment `\begin{align*} E(x_{i,q} | T_i \ge t_j, C_i \ge t_j) = \sum_{j \in \mathcal{R}(t_j)}x_{j,q}\frac{\exp(\mathbf{x}_j\boldsymbol\beta)}{\sum_{k \in \mathcal{R}(t_j)}\exp(\mathbf{x}_k\boldsymbol\beta)} \end{align*}` Note that this expression doesn't depend on time at all, only the risk set itself. .question[ Ok, now what do we do with this? ] --- ### Schoenfeld residuals The .vocab[Schoenfeld residuals] for variable `\(x_q\)` at time `\(t_j\)` are the differences in the observed *covariate values* of `\(x_q\)` vs. the expected covariate value in the risk set at that time. As it turns out, proportional hazards is violated if a model with the same covariates, but time-varying *coefficients* (that is, allowing `\(\beta\)` terms to be a function of time instead of constant) might have evidence of fitting better. Schoenfeld residuals are related to whether a time-varying coefficient model might fit better (see Grambsch and Therneau, 1994). --- ### Schoenfeld residuals To summarize, each Schoenfeld residual is the difference between the observed covariate and the expected covariate for each observed failure, conditioned on the risk set at that time. We are essentially seeing how covariate values for subjects that fail might differ from a weighted average of those in the risk set, and looking at this discrepancy through time. --- ### Schoenfeld residuals In plotting Schoenfeld residuals vs. survival times, we expect them to have no autocorrelation through time, randomly distributed around 0. This plot essentially tells us about potential time-dependence of estimated regression coefficients, which we don't want to see (otherwise PH is violated). (most software packages actually give a scaled Schoenfeld residual, scaled by the inverse covariance matrix in order to downweight observations we're less sure about) --- ### Cox model diagnostics ```r library(survminer) ggcoxdiagnostics(coxm1, type = "schoenfeld") ``` <!-- -->