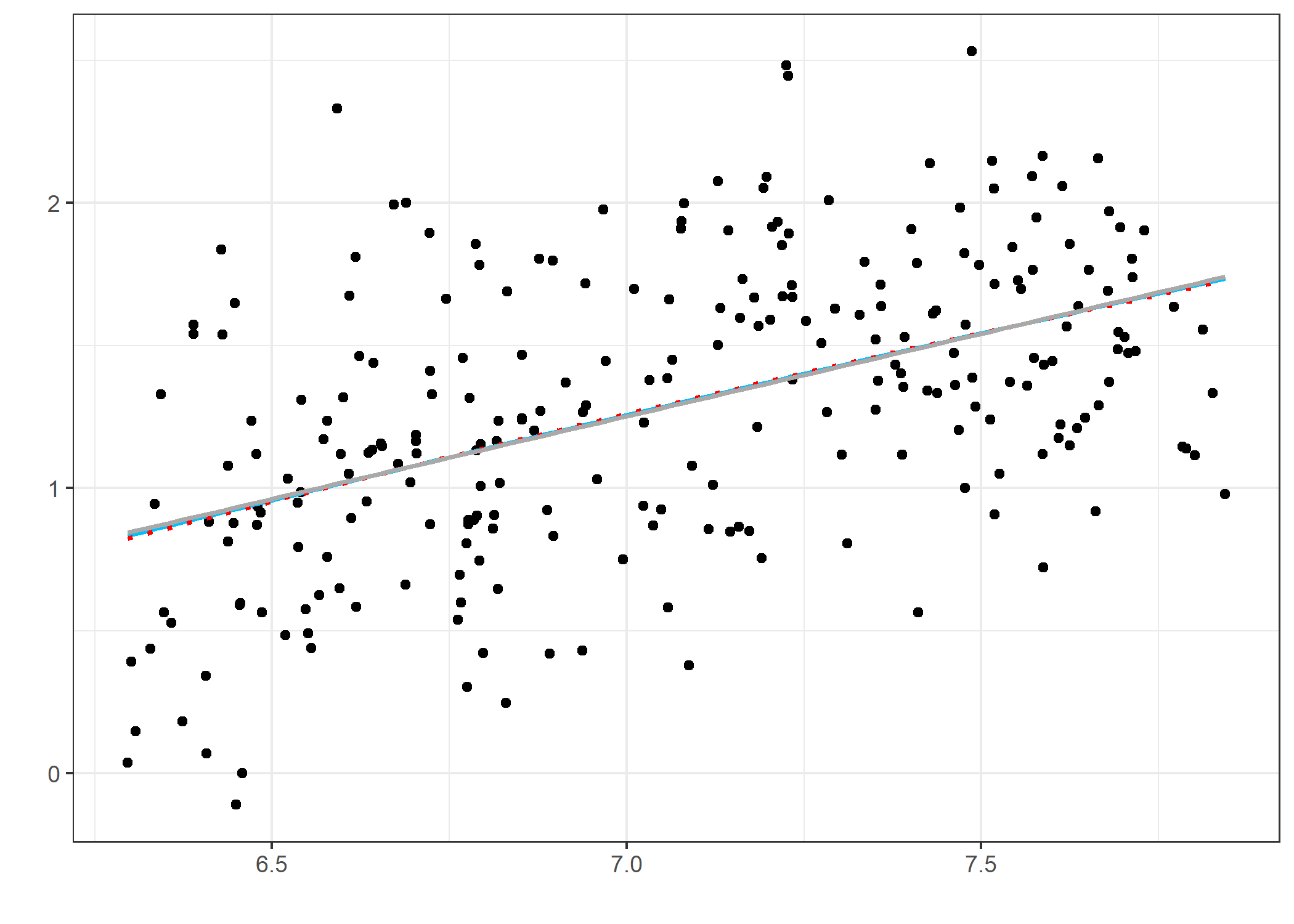

class: center, middle, inverse, title-slide # Comparing models ### Yue Jiang ### STA 210 / Duke University / Spring 2024 --- ### Comparing models? .question[ Suppose you had two models (that aimed to do the same thing). In what sorts of ways might you compare the models? How might one model be "better" (whatever this means) than another? Think about how models are being *used* (for explanation and prediction, etc.). ] --- ### Interpretation? ```r ggplot(data = temp, aes(x = x, y = y)) + geom_point() + theme_bw() + labs(x = "", y = "") + stat_smooth(method = "lm", formula = y ~ I(x^0.5), se = F, color = "deepskyblue") + stat_smooth(method = "lm", formula = y ~ log(x), lty = 3, se = F, color = "red") + stat_smooth(method = "lm", formula = y ~ x, se = F, color = "darkgray") ``` --- ### Interpretation? <!-- --> --- ### Interpretation? ```r tidy(m1) ``` ``` ## # A tibble: 2 x 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) -6.93 0.919 -7.54 8.79e-13 ## 2 I(x^0.5) 3.09 0.346 8.94 8.97e-17 ``` ```r tidy(m2) ``` ``` ## # A tibble: 2 x 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) -6.76 0.895 -7.56 7.89e-13 ## 2 log(x) 4.12 0.458 9.00 6.15e-17 ``` ```r tidy(m3) ``` ``` ## # A tibble: 2 x 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) -2.81 0.461 -6.08 4.40e- 9 ## 2 x 0.580 0.0652 8.89 1.32e-16 ``` --- ### Interpretation? Model 1 (square transform on `\(x\)`): `\begin{align*} \widehat{y}_i &= -6.93 + 3.09~\sqrt{x}_i \end{align*}` Model 2 (log transform on `\(x\)`): `\begin{align*} \widehat{y}_i &= -6.76 + 4.12~\log{x}_i \end{align*}` Model 3 (just a linear model): `\begin{align*} \widehat{y}_i &= -2.81 + 0.58~x_i \end{align*}` .question[ How would you interpret the slope estimate for `\(x\)` in each of these models? Is there any you might prefer? ] --- ### Other metrics? .vocab[Mean squared error] (MSE) is given by `\begin{align*} MSE = \frac{1}{n}\sum_{i = 1}^n (y_i - \widehat{y}_i)^2 \end{align*}` .question[ In plain English, what is the mean squared error? Why might we also be interested in the .vocab[root mean squared error] (RMSE), which is the square root of the MSE? ] --- ### Other metrics? ```r m1_aug <- augment(m1); m2_aug <- augment(m2); m3_aug <- augment(m3) rmse(m1_aug, truth = y, estimate = .fitted) ``` ``` ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 0.441 ``` ```r rmse(m2_aug, truth = y, estimate = .fitted) ``` ``` ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 0.440 ``` ```r rmse(m3_aug, truth = y, estimate = .fitted) ``` ``` ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 0.441 ``` --- ### Overall F-test for the model `\begin{align*} y_i &= \beta_0 + \beta_1x_{i1} + \cdots + \beta_px_{ip} + \epsilon \end{align*}` `\begin{align*} H_0: \beta_1 = \cdots = \beta_p = 0\\ H_1: \text{at least one }~\beta_k \neq 0 \end{align*}` .question[ What are the F test hypotheses in plain English? ] --- ### Overall F-test for the model ``` ## ## Call: ## lm(formula = y ~ x) ## ## Residuals: ## Min 1Q Median 3Q Max ## -1.0415 -0.3251 0.0177 0.2917 1.3161 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -2.8060 0.4612 -6.08 4.4e-09 *** ## x 0.5797 0.0652 8.89 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 0.443 on 248 degrees of freedom ## Multiple R-squared: 0.242, Adjusted R-squared: 0.238 ## F-statistic: 79 on 1 and 248 DF, p-value: <2e-16 ``` --- ### Comparing nested models Two linear models are .vocab[nested] if one of the models consists solely of terms found "within" another. For instance: `\begin{align*} y_i &= \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + \epsilon_i\\ y_i &= \beta_0 + \beta_1x_{i1} + \epsilon_i \end{align*}` or `\begin{align*} y_i &= \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + \beta_3x_{i3} + \beta_4x_{i1}x_{i2} + \epsilon_i\\ y_i &= \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + \beta_3x_{i3} + \epsilon_i \end{align*}` We can test *groups* of nested variables as well (why might we want to do this?): `\begin{align*} y_i &= \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + \beta_3x_{i3} + \beta_4x_{i1}x_{i2} + \epsilon_i\\ y_i &= \beta_0 + \beta_1x_{i1} + \epsilon_i \end{align*}` --- ### The nested F test ``` ## ## Call: ## lm(formula = PM2.5 ~ TEMP, data = dat) ## ## Residuals: ## Min 1Q Median 3Q Max ## -103.0 -57.2 -21.6 34.1 616.6 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 94.1178 0.7030 133.9 <2e-16 *** ## TEMP -0.8896 0.0394 -22.6 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 79.8 on 31813 degrees of freedom ## Multiple R-squared: 0.0158, Adjusted R-squared: 0.0158 ## F-statistic: 511 on 1 and 31813 DF, p-value: <2e-16 ``` --- ### The nested F test ``` ## ## Call: ## lm(formula = PM2.5 ~ TEMP + PRES + RAIN + wd, data = dat) ## ## Residuals: ## Min 1Q Median 3Q Max ## -145.4 -48.7 -18.3 29.2 615.1 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 2902.2107 74.6836 38.86 < 2e-16 *** ## TEMP -3.1551 0.0676 -46.69 < 2e-16 *** ## PRES -2.7210 0.0731 -37.24 < 2e-16 *** ## RAIN -2.5668 0.5036 -5.10 3.5e-07 *** ## wdENE -5.8459 1.9820 -2.95 0.0032 ** ## wdESE 0.2874 2.4198 0.12 0.9054 ## wdN -52.7173 2.3173 -22.75 < 2e-16 *** ## wdNE -18.0660 1.8951 -9.53 < 2e-16 *** ## wdNNE -40.6988 2.2086 -18.43 < 2e-16 *** ## wdNNW -72.1930 2.5190 -28.66 < 2e-16 *** ## wdNW -70.6412 2.3786 -29.70 < 2e-16 *** ## wdS -5.1466 2.6508 -1.94 0.0522 . ## wdSE -2.8372 2.6250 -1.08 0.2798 ## wdSSE -1.4042 2.8888 -0.49 0.6269 ## wdSSW -10.7876 2.3119 -4.67 3.1e-06 *** ## wdSW -20.0180 2.0566 -9.73 < 2e-16 *** ## wdW -32.7593 2.7593 -11.87 < 2e-16 *** ## wdWNW -52.2191 2.8248 -18.49 < 2e-16 *** ## wdWSW -27.7496 2.2769 -12.19 < 2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 75 on 31796 degrees of freedom ## Multiple R-squared: 0.132, Adjusted R-squared: 0.131 ## F-statistic: 268 on 18 and 31796 DF, p-value: <2e-16 ``` --- ### The nested F test ```r anova(m0, m1, m2) # /3/ nested models! ``` ``` ## Analysis of Variance Table ## ## Model 1: PM2.5 ~ TEMP ## Model 2: PM2.5 ~ TEMP + PRES + RAIN ## Model 3: PM2.5 ~ TEMP + PRES + RAIN + wd ## Res.Df RSS Df Sum of Sq F Pr(>F) ## 1 31813 2.03e+08 ## 2 31811 1.94e+08 2 8501865 756 <2e-16 *** ## 3 31796 1.79e+08 15 15376534 182 <2e-16 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` .question[ How do we interpret this table? ] --- ### An old model... `\begin{align*} \widehat{\log_{10}(price)}_i = 2.34 + 1.78~carat_i - 0.44(carat_i)^2 \end{align*}` .question[ We know that this model has a *horrible* interpretation in terms of clearly explaining how carat and price might relate to each other. However, it *did* do pretty well in terms of fitting the data (and thus in terms of prediction). How might we quantify how well our model is doing in terms of prediction? ] --- ### Loss functions Remember that we are predicting the outcome of each of our outcome variables `\(y_i\)`, with a *linear* prediction made from some model `\(\hat{\beta}_0 + \hat{\beta}_1x_i\)` But how do we tell whether we've made a "good" prediction or not? What do we count as good? Keep in mind the notion of the **residual**: `\begin{align*} \hat{\epsilon}_i &= y_i - \left(\hat{\beta}_0 + \hat{\beta}_1x_i\right) \\ &= y_i - \hat{y}_i \end{align*}` --- ### Some loss functions What might be some candidate loss functions? `\begin{align*} f(y_1, \cdots, y_n, \hat{y}_1, \cdots, \hat{y}_n) &= \frac{1}{n} \sum_{i = 1}^n \mathbf{1}_{\hat{y}_i \neq 17} &?\\ f(y_1, \cdots, y_n, \hat{y}_1, \cdots, \hat{y}_n) &= \frac{1}{n} \sum_{i = 1}^n \mathbf{1}_{y_i \neq \hat{y}_i} &?\\ f(y_1, \cdots, y_n, \hat{y}_1, \cdots, \hat{y}_n) &= \frac{1}{n} \sum_{i = 1}^n \Big | y_i - \hat{y}_i \Big | &?\\ f(y_1, \cdots, y_n, \hat{y}_1, \cdots, \hat{y}_n) &= \frac{1}{n} \sum_{i = 1}^n \left(y_i - \hat{y}_i\right)^2 &?\\ f(y_1, \cdots, y_n, \hat{y}_1, \cdots, \hat{y}_n) &= \frac{1}{n} \sum_{i = 1}^n \log \left( \frac{1 + \exp(2(y_i - \hat{y}_i))}{2\exp(y_i - \hat{y}_i)} \right) &? \end{align*}` --- ### The Mean Squared Error .vocab[Mean squared error] (MSE) of our predictions is given by `\begin{align*} MSE = \frac{1}{n}\sum_{i = 1}^n (y_i - \widehat{y}_i)^2 \end{align*}` .question[ In plain English, what is the mean squared error? Why does this type of loss function make sense? Should we always choose the model that minimizes the MSE? ] --- ### What about this model? <img src="cross-validation_files/figure-html/unnamed-chunk-3-1.png" width="90%" style="display: block; margin: auto;" /> --- ### What about this model? <img src="cross-validation_files/figure-html/unnamed-chunk-4-1.png" width="90%" style="display: block; margin: auto;" /> --- ### Comparing the two models .question[ Which model has the lower MSE? Which would you rather use for interpretation? Which would you rather use for prediction? Why? ] --- ### What about this model? <img src="cross-validation_files/figure-html/unnamed-chunk-5-1.png" width="90%" style="display: block; margin: auto;" /> --- ### What went wrong? --- ### Cross-validation .vocab[Cross-validation] is a concept that revolves around how well our model performs *outside* of the data that it was fit on. It's generally used in **prediction** settings to assess how well our model might work in a set of "previously unseen" data. In cross-validation techniques, a model is first fit on a .vocab[training] set, and then is evaluated on an "independent" .vocab[testing] set that was never. used to fit any of the models. The goal of these types of methods is often to avoid .vocab[overfitting], which might occur, for instance, if we're adding too many variables, etc. --- ### Cross-validation .pull-left[ .vocab[Exhaustive] splits - Leave `\(p\)` out cross-validation: find all possible ways to create *testing* sets of size `\(p\)` - Leave one out cross-validation: same as above, but with an testing set of size `\(p = 1\)` ] .pull-right[ .vocab[Non-exhaustive] splits - Simple holdout cross-validation: only create one testing split - Monte Carlo cross-validation: same as above, but done multiple times with random testing sets each time - `\(k\)`-fold cross-validation: randomly split the dataset into `\(k\)` mutually exclusive but collective exhaustive subsets; use each of these `\(k\)` subsets as a test set ] .centering[**See board**] --- ### Cross-validation .question[ What are some of the advantages and disadvantages of each of these methods? ] You might see a bunch of lingo out there, but all of these methods are similar in spirit in using independent testing sets and trying to maximize predictive power while avoiding overfitting in unseen data --- ### Some candidate models ```r m1 <- lm(log(price) ~ color + clarity + carat, data = diamonds) m2 <- lm(log(price) ~ color + clarity + carat + cut, data = diamonds) m1_aug <- augment(m1) m2_aug <- augment(m2) ``` --- ### Some candidate models ```r rmse(m1_aug, truth = `log(price)`, estimate = .fitted) ``` ``` ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 0.319 ``` ```r rmse(m2_aug, truth = `log(price)`, estimate = .fitted) ``` ``` ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 0.319 ``` --- ### Simple holdout method ```r set.seed(123) dim(diamonds) ``` ``` ## [1] 2000 10 ``` ```r indices <- sample(1:2000, size = 2000 * 0.8, replace = F) train.data <- diamonds %>% slice(indices) test.data <- diamonds %>% slice(-indices) dim(train.data) ``` ``` ## [1] 1600 10 ``` ```r dim(test.data) ``` ``` ## [1] 400 10 ``` --- ### Simple holdout method ```r m1 <- lm(log(price) ~ color + clarity + carat, data = train.data) m2 <- lm(log(price) ~ color + clarity + carat + cut, data = train.data) m1_pred <- predict(m1, test.data) m2_pred <- predict(m2, test.data) ``` --- ### Simple holdout method ```r head(m1_pred) ``` ``` ## 1 2 3 4 5 6 ## 6.945904 7.315938 10.278735 8.451798 7.609596 8.781945 ``` ```r head(log(test.data$price)) ``` ``` ## [1] 6.569481 7.419381 9.765489 8.620832 7.649693 8.892062 ``` ```r head(log(test.data$price) - m1_pred) ``` ``` ## 1 2 3 4 5 6 ## -0.37642278 0.10344306 -0.51324546 0.16903378 0.04009678 0.11011630 ``` --- ### Simple holdout method ```r sqrt( mean( (log(test.data$price) - m1_pred)^2 ) ) ``` ``` ## [1] 0.3188945 ``` ```r sqrt( mean( (log(test.data$price) - m2_pred)^2 ) ) ``` ``` ## [1] 0.3191523 ``` --- ### Leave one out CV ```r library(caret) cv_method <- trainControl(method = "LOOCV") m1 <- train(log(price) ~ color + clarity + carat, data = diamonds, method = "lm", trControl = cv_method) m2 <- train(log(price) ~ color + clarity + carat + cut, data = diamonds, method = "lm", trControl = cv_method) ``` --- ### Leave one out CV ```r print(m1) ``` ``` ## Linear Regression ## ## 2000 samples ## 3 predictor ## ## No pre-processing ## Resampling: Leave-One-Out Cross-Validation ## Summary of sample sizes: 1999, 1999, 1999, 1999, 1999, 1999, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.3218101 0.899073 0.2599859 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### Leave one out CV ```r print(m2) ``` ``` ## Linear Regression ## ## 2000 samples ## 4 predictor ## ## No pre-processing ## Resampling: Leave-One-Out Cross-Validation ## Summary of sample sizes: 1999, 1999, 1999, 1999, 1999, 1999, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.3220167 0.8989444 0.2605107 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### Leave `\(p\)` out CV ...we're not going to do this because of computational costs. For instance, for `\(p = 2\)`, there are almost 2 million ways to create unique test sets; for `\(p = 3\)`, there are over 1.3 billion ways to do so (given our 2,000 observations). --- ### K-fold CV ```r library(caret) set.seed(123) cv_method <- trainControl(method = "cv", number = 10) m1 <- train(log(price) ~ color + clarity + carat, data = diamonds, method = "lm", trControl = cv_method) m2 <- train(log(price) ~ color + clarity + carat + cut, data = diamonds, method = "lm", trControl = cv_method) ``` --- ### K-fold CV ```r print(m1) ``` ``` ## Linear Regression ## ## 2000 samples ## 3 predictor ## ## No pre-processing ## Resampling: Cross-Validated (10 fold) ## Summary of sample sizes: 1800, 1800, 1800, 1800, 1800, 1800, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.321648 0.9010039 0.2599949 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### K-fold CV ```r print(m2) ``` ``` ## Linear Regression ## ## 2000 samples ## 4 predictor ## ## No pre-processing ## Resampling: Cross-Validated (10 fold) ## Summary of sample sizes: 1800, 1800, 1800, 1800, 1800, 1800, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.3210479 0.9008995 0.2601354 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### Repeated K-fold CV ```r library(caret) set.seed(123) cv_method <- trainControl(method = "cv", number = 10, repeats = 5) m1 <- train(log(price) ~ color + clarity + carat, data = diamonds, method = "lm", trControl = cv_method) m2 <- train(log(price) ~ color + clarity + carat + cut, data = diamonds, method = "lm", trControl = cv_method) ``` --- ### Repeated k-fold CV ```r print(m1) ``` ``` ## Linear Regression ## ## 2000 samples ## 3 predictor ## ## No pre-processing ## Resampling: Cross-Validated (10 fold) ## Summary of sample sizes: 1800, 1800, 1800, 1800, 1800, 1800, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.321648 0.9010039 0.2599949 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### Repeated k-fold CV ```r print(m2) ``` ``` ## Linear Regression ## ## 2000 samples ## 4 predictor ## ## No pre-processing ## Resampling: Cross-Validated (10 fold) ## Summary of sample sizes: 1800, 1800, 1800, 1800, 1800, 1800, ... ## Resampling results: ## ## RMSE Rsquared MAE ## 0.3210479 0.9008995 0.2601354 ## ## Tuning parameter 'intercept' was held constant at a value of TRUE ``` --- ### Comparing our models ```r table(diamonds$cut) ``` ``` ## ## Fair Good Very Good Premium Ideal ## 61 199 452 505 783 ``` .question[ How many more model parameters have to be estimated? Given what we've seen, which of these two models might you use? In what settings? ]