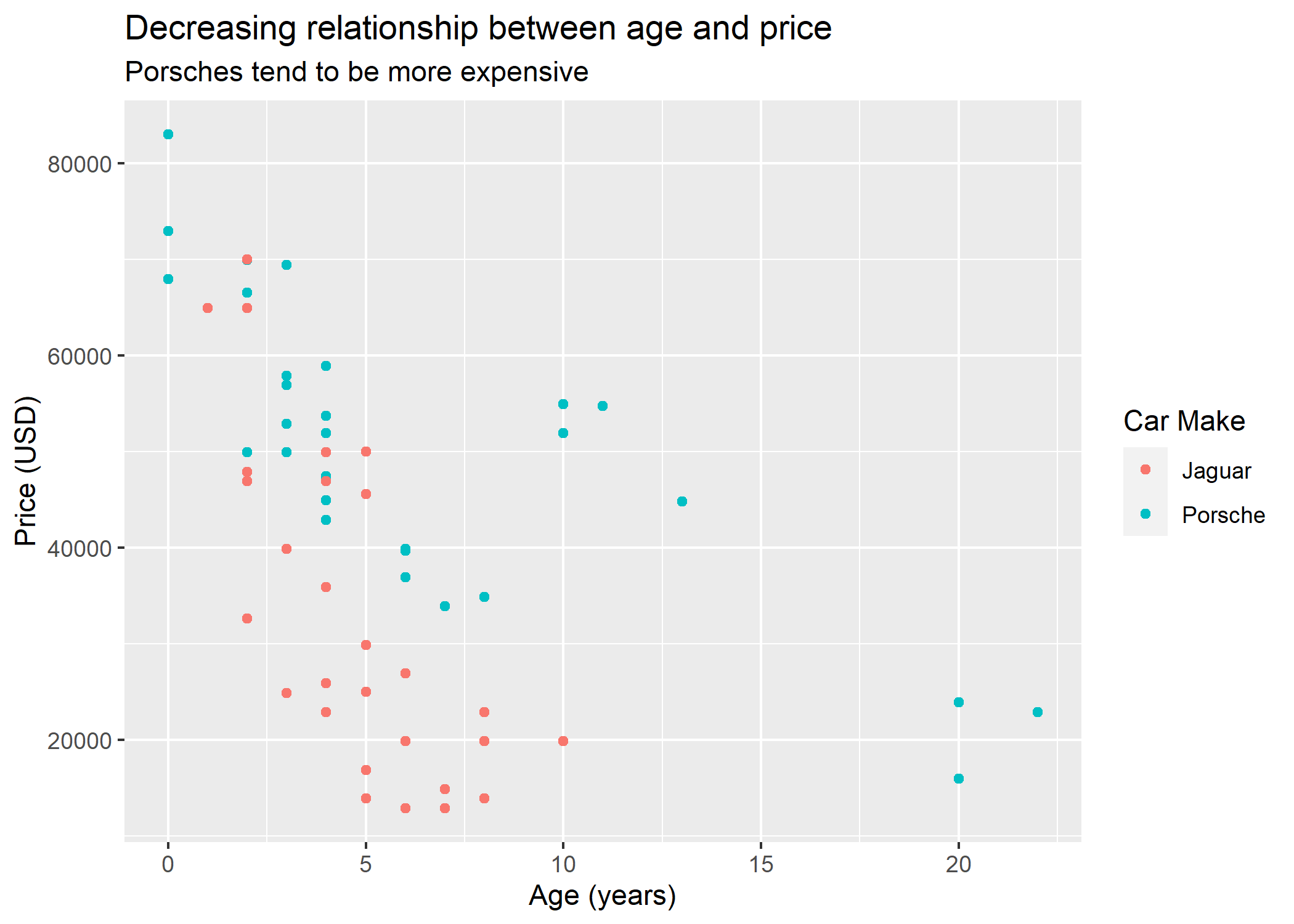

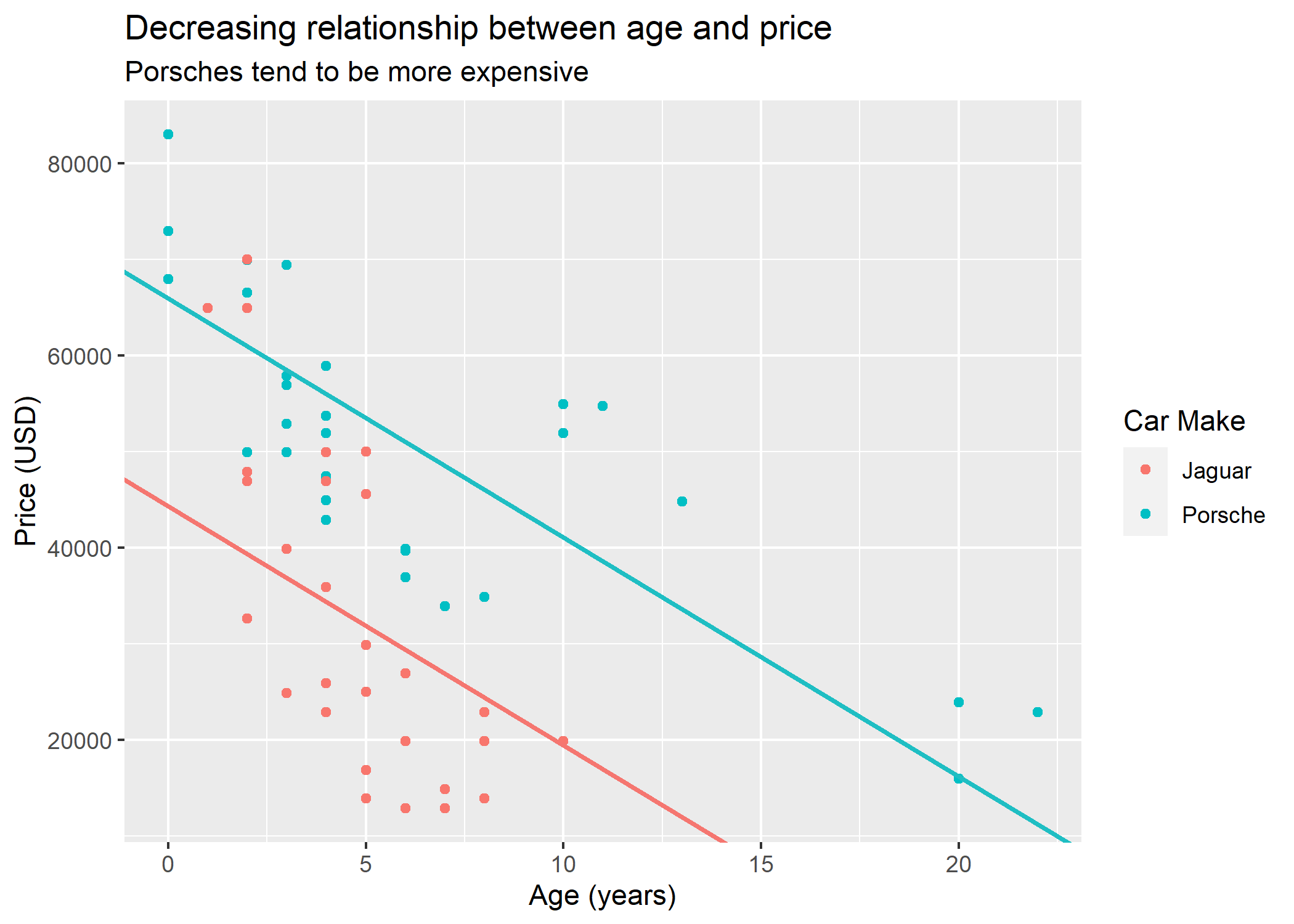

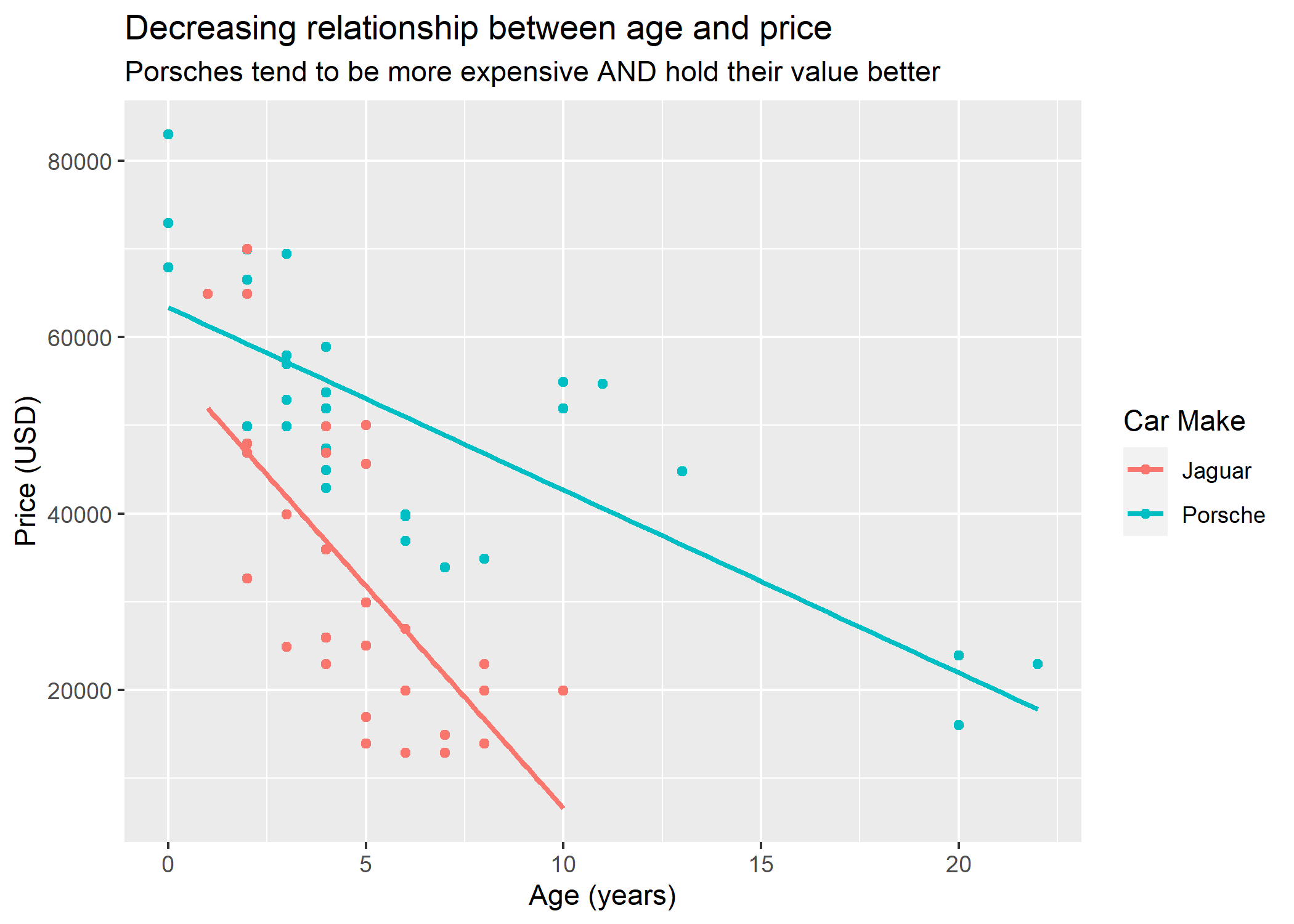

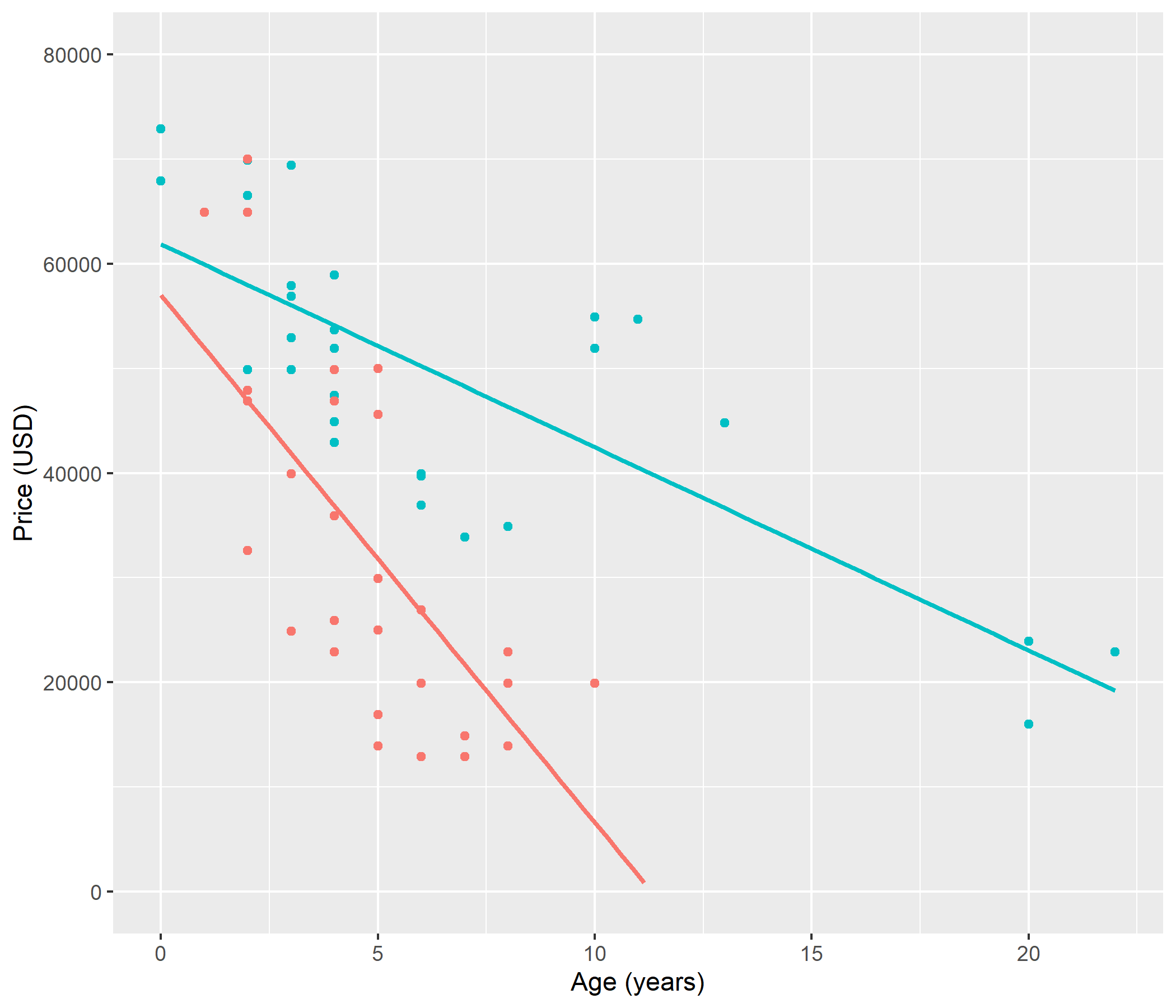

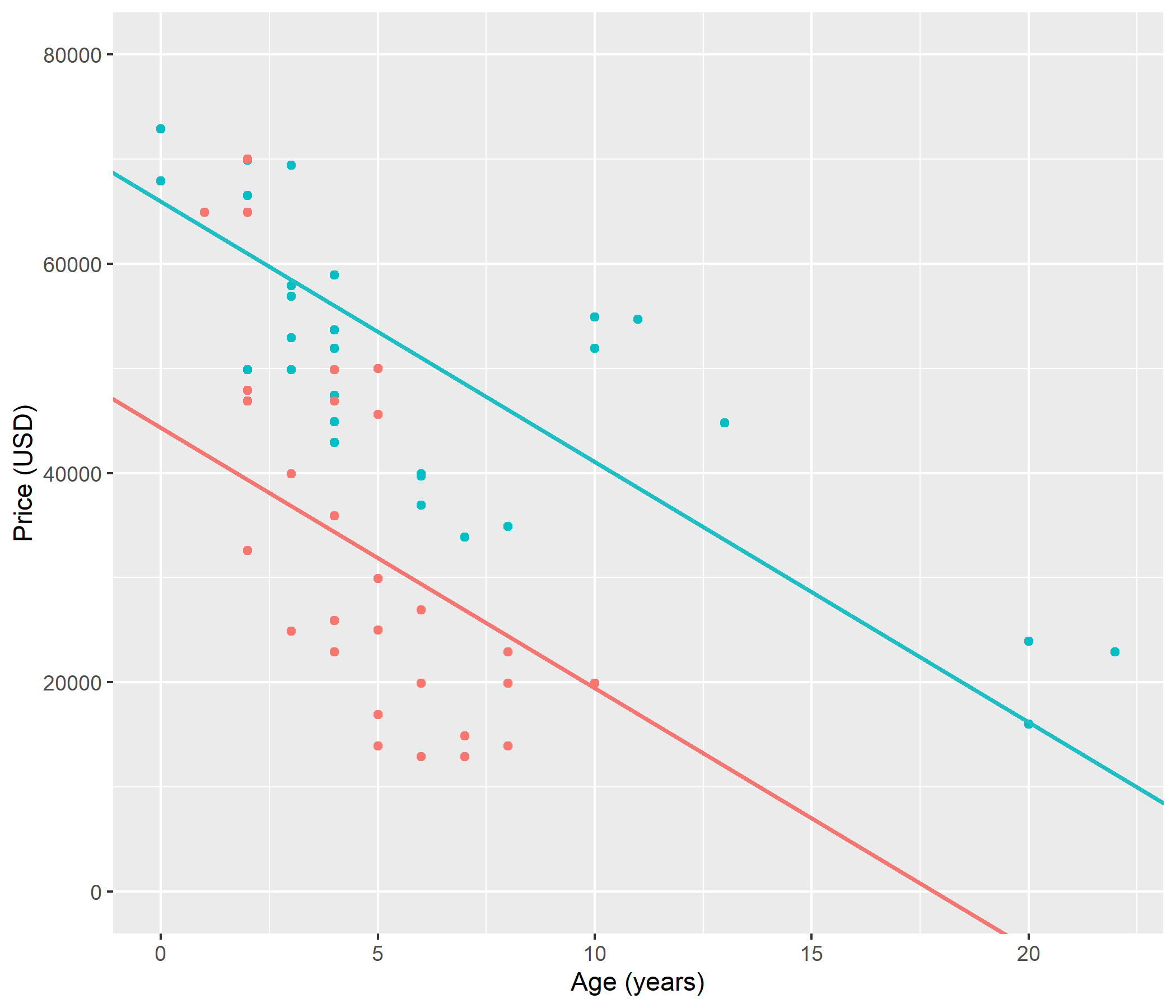

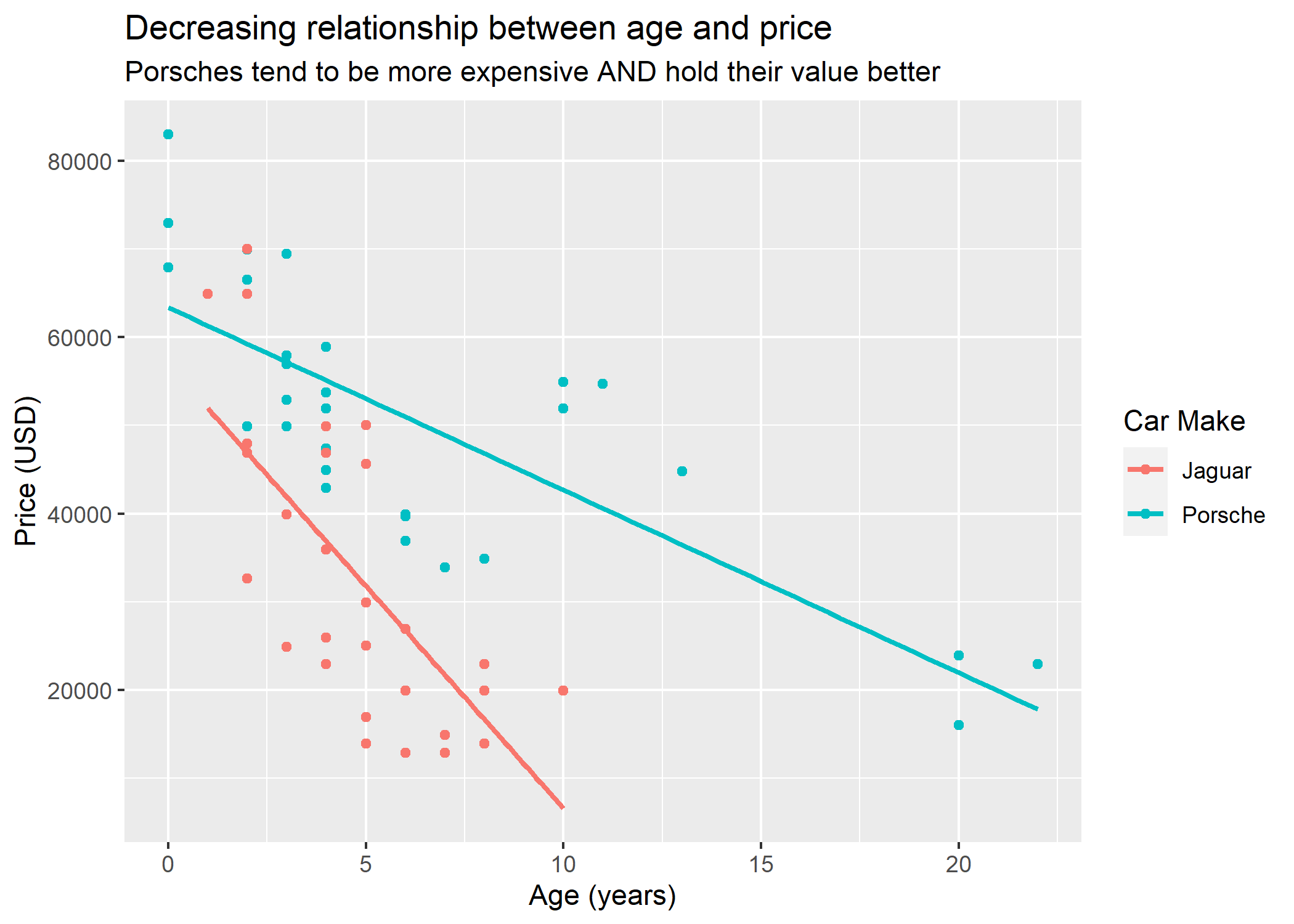

class: center, middle, inverse, title-slide

# Interaction Terms

### Yue Jiang

### STA 210 / Duke University / Spring 2024

---

### Review: Multiple regression

The model is given by

`\begin{align*}
y_i = \beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + \cdots + \beta_px_{ip} + \epsilon_i
\end{align*}`

- `\(p\)` is the total number of predictor or explanatory variables
- `\(y_i\)` is the outcome (dependent variable) of interest
- `\(\beta_0\)` is the intercept parameter
- `\(\beta_1, \beta_2, \cdots, \beta_p\)` are the slope parameters
- `\(x_{i1}, x_{i2}, \cdots, x_{ip}\)` are predictor variables
- `\(\epsilon_i\)` is the error (like the `\(\beta\)`s, it is not observed)

---

### Review: Vocabulary

- .vocab[Response variable]: Variable whose behavior or variation you are trying to understand.
- .vocab[Explanatory variables]: Other variables that you want to use to explain the variation in the response.
- .vocab[Predicted value]: Output of the **model function**
  - The model function gives the typical value of the response variable *conditioning* on the explanatory variables.
- .vocab[Residuals]: Show how far each case is from its predicted value
  - **Residual = Observed value - Predicted value**

---

### Today's data

<img src="img/porsche.png" width="100%" style="display: block; margin: auto;" />

---

### Today's data

```r
library(readr)  # provides read_csv()
sports_car_prices <- read_csv("data/sportscars.csv")
```

The file `sportscars.csv` contains prices for Porsche and Jaguar cars for sale on cars.com.

- `car`: car make (Jaguar or Porsche)
- `price`: price in USD
- `age`: age of the car in years
- `mileage`: previous miles driven

---

### Price, age, and make

<!-- -->

---

### Review: Interpreting model coefficients

Consider the following model:

`\begin{align*}
Price_i = \beta_0 + \beta_1(Age_i) + \beta_2(I(Porsche)_i) + \epsilon_i
\end{align*}`

Equivalently, in terms of conditional expectations (think about how this form relates to the interpretations!), assuming that the error terms have expected value 0:
`\begin{align*}
E(Price_i \mid Age_i, I(Porsche)_i) = \beta_0 + \beta_1(Age_i) + \beta_2(I(Porsche)_i)
\end{align*}`

.question[
- How would you interpret `\(\beta_0\)`, `\(\beta_1\)`, and `\(\beta_2\)` in the model above?
]

---

### Price, age, and make

<!-- -->

---

### Modeling with main effects

```r
m_main <- lm(price ~ age + car, data = sports_car_prices)
summary(m_main)
```

```
## 
## Call:
## lm(formula = price ~ age + car, data = sports_car_prices)
## 
## Residuals:
##    Min     1Q Median     3Q    Max 
## -17974 -10655  -1554   8653  30665 
## 
## Coefficients:
##             Estimate Std. Error t value Pr(>|t|)    
## (Intercept)  44309.8     2767.6  16.010  < 2e-16 ***
## age          -2487.2      347.6  -7.155 1.75e-09 ***
## carPorsche   21647.6     3089.0   7.008 3.09e-09 ***
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 11850 on 57 degrees of freedom
## Multiple R-squared:  0.6071, Adjusted R-squared:  0.5934 
## F-statistic: 44.04 on 2 and 57 DF,  p-value: 2.728e-12
```

$$
\widehat{price}_i = 44310 - 2487~age_i + 21648~I(Porsche)_i
$$

- Jaguar: `$$\begin{align}\widehat{price} &= 44310 - 2487~age + 21648 \times 0\\ &= 44310 - 2487~age\end{align}$$`
- Porsche: `$$\begin{align}\widehat{price} &= 44310 - 2487~age + 21648 \times 1\\ &= 65958 - 2487~age\end{align}$$`

- The rate of change in price as the age of the car increases does not depend on the make of the car (.vocab[same slopes])
- Porsches are consistently more expensive than Jaguars (.vocab[different intercepts])

---

### Modeling with main effects

- New Jaguars (age 0) are expected to have a price of $44,310.
- Controlling for the make of a car (Porsche vs. Jaguar), for each additional year of a car's age, the price of the car is expected to be, on average, lower by $2,487.
- Controlling for the age of a car, Porsches are expected to have a price that is $21,648 greater than Jaguars.

---

### What's different here?

<!-- -->

---

### What's the difference here?
.question[
What is the difference in the relationship(s) depicted by the left and right panels below?
]

.pull-left[
<!-- -->
]

.pull-right[
<!-- -->
]

---

### Differences between the two models...

- `I(Porsche)` is the only variable in our model that affects the intercept.
- The model we specified assumes Jaguars and Porsches have the **same slope** and **different intercepts**.
- What is the most appropriate model for these data?
  - same slope and intercept for Jaguars and Porsches?
  - same slope and different intercept for Jaguars and Porsches?
  - different slope and different intercept for Jaguars and Porsches?

---

### Continuous-by-categorical interactions

Including an .vocab[interaction effect] between a continuous predictor and a categorical predictor in the model allows for different slopes, i.e., nonparallel lines. We can accomplish this by adding an .vocab[interaction variable], which is the product of two explanatory variables.

An interaction means that the relationship between one explanatory variable (say, age) and the response (say, price) depends on the value of another explanatory variable (say, make). We saw this in the right-hand panel shown earlier: Jaguars depreciate much faster, so the slope of price on age is steeper for Jaguars than for Porsches.

---

### Price vs. age and car interacting

<!-- -->

---

### Modeling with interaction effects

```r
m_int <- lm(price ~ age + car + age * car, data = sports_car_prices)
summary(m_int)
```

```
## 
## Call:
## lm(formula = price ~ age + car + age * car, data = sports_car_prices)
## 
## Residuals:
##      Min       1Q   Median       3Q      Max 
## -17888.7  -8940.0   -595.8   9305.2  23091.5 
## 
## Coefficients:
##                Estimate Std. Error t value Pr(>|t|)    
## (Intercept)     56988.4     4723.9  12.064  < 2e-16 ***
## age             -5039.9      861.0  -5.853 2.63e-07 ***
## carPorsche       6386.6     5567.1   1.147  0.25617    
## age:carPorsche   2969.2      928.6   3.197  0.00228 ** 
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 10990 on 56 degrees of freedom
## Multiple R-squared:  0.6678, Adjusted R-squared:  0.65 
## F-statistic: 37.52 on 3 and 56 DF,  p-value: 1.983e-13
```

`$$\widehat{price} = 56988 - 5040~age + 6387~carPorsche + 2969~age \times carPorsche$$`

---

### Interpretation of interaction effects

`$$\begin{aligned}\widehat{price}_i = 56988 &- 5040~age_i + 6387~I(Porsche)_i\\ &+ 2969~age_i \times I(Porsche)_i\end{aligned}$$`

- Jaguar: `$$\begin{align}\widehat{price}_i &= 56988 - 5040~age_i + 6387 \times 0 + 2969~age_i \times 0\\ &= 56988 - 5040~age_i\end{align}$$`
- Porsche: `$$\begin{align}\widehat{price}_i &= 56988 - 5040~age_i + 6387 \times 1 + 2969~age_i \times 1\\ &= 63375 - 2071~age_i\end{align}$$`

.question[
How do you interpret these two models?
]

---

### Interpretation of interaction effects

`$$\widehat{price}_i = \widehat{\beta}_0 + \widehat{\beta}_1~age_i + \widehat{\beta}_2~I(Porsche)_i + \widehat{\beta}_3~age_i \times I(Porsche)_i$$`

.question[
- How do you interpret the coefficients in this model?
- What is the predicted difference in price, given a one-year increase in age? (be careful here!)
]

---

### A generic interaction model

`\begin{align*}
y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \beta_3 x_{i3} + \beta_4 x_{i1} \times x_{i2} + \epsilon_i
\end{align*}`

.question[
- What happens when `\(x_1\)` increases by 1 (while `\(x_2\)` and `\(x_3\)` remain constant)?
- How about when `\(x_2\)` increases by 1 (while `\(x_1\)` and `\(x_3\)` remain constant)?
- How about `\(x_3\)`?
- With this in mind, how might you interpret the various `\(\beta\)` terms?
]

Take-away: if there are interaction terms in your model, interpreting the main effects is often quite a bit trickier. Ask yourself whether the main effects are even what you care about!
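
---

### Sketch: age slope by make

A minimal sketch (not part of the original analysis) that plugs the estimates as printed by `summary(m_int)` into the interaction model. The data and fitted object are not reproduced here, so the numbers are typed in by hand; `b0` to `b3`, `intercept_for()`, and `slope_for()` are hypothetical names. It shows why a one-year increase in age has no single answer in this model: the predicted change is `\(\widehat{\beta}_1\)` for Jaguars but `\(\widehat{\beta}_1 + \widehat{\beta}_3\)` for Porsches.

```r
# Estimates as printed by summary(m_int); typed in by hand
b0 <- 56988.4   # (Intercept)
b1 <- -5039.9   # age
b2 <- 6386.6    # carPorsche
b3 <- 2969.2    # age:carPorsche

# porsche is the I(Porsche) indicator: 0 = Jaguar, 1 = Porsche
intercept_for <- function(porsche) b0 + b2 * porsche
slope_for     <- function(porsche) b1 + b3 * porsche

slope_for(0)      # Jaguar age slope:  -5039.9
slope_for(1)      # Porsche age slope: -2070.7
intercept_for(1)  # Porsche intercept: 63375

# Predicted Porsche-minus-Jaguar price gap at ages 0, 2, and 5;
# algebraically this is b2 + b3 * age, so it widens with age
ages <- c(0, 2, 5)
gap <- (intercept_for(1) + slope_for(1) * ages) -
       (intercept_for(0) + slope_for(0) * ages)
gap  # 6386.6 12325.0 21232.6
```

With these estimates, one additional year of age is associated with a predicted price drop of about $5,040 for a Jaguar but only about $2,071 for a Porsche, which is why the predicted gap between the two makes grows as the cars age.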
---

### Another model

```
## 
## Call:
## lm(formula = price ~ age + car + mileage + age * car, data = sports_car_prices)
## 
## Residuals:
##      Min       1Q   Median       3Q      Max 
## -20744.6  -5132.3    671.6   5295.7  17763.1 
## 
## Coefficients:
##                Estimate Std. Error t value Pr(>|t|)    
## (Intercept)    57998.74    3913.75  14.819  < 2e-16 ***
## age            -1669.76     965.20  -1.730   0.0892 .  
## carPorsche     12020.21    4733.42   2.539   0.0140 *  
## mileage         -494.35      95.51  -5.176  3.3e-06 ***
## age:carPorsche  1307.98     832.74   1.571   0.1220    
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 9095 on 55 degrees of freedom
## Multiple R-squared:  0.7766, Adjusted R-squared:  0.7604 
## F-statistic:  47.8 on 4 and 55 DF,  p-value: < 2.2e-16
```

---

### Another model

.question[
Is there sufficient evidence to suggest that the relationship between age and the price of a car depends on the make of the car (while adjusting for mileage)?

How about evidence to suggest that the relationship between the make of the car and the price of a car depends on the age (while adjusting for mileage)?
]

---

### Another model

```
## 
## Call:
## lm(formula = price ~ age + car + mileage + age * mileage, data = sports_car_prices)
## 
## Residuals:
##      Min       1Q   Median       3Q      Max 
## -20718.8  -5207.6   -914.5   7411.2  17505.0 
## 
## Coefficients:
##              Estimate Std. Error t value Pr(>|t|)    
## (Intercept) 59078.676   3566.572  16.565  < 2e-16 ***
## age         -1846.530    800.448  -2.307   0.0249 *  
## carPorsche  17594.454   2400.320   7.330 1.09e-09 ***
## mileage      -634.913     93.644  -6.780 8.64e-09 ***
## age:mileage    22.496      9.961   2.258   0.0279 *  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 8894 on 55 degrees of freedom
## Multiple R-squared:  0.7864, Adjusted R-squared:  0.7709 
## F-statistic: 50.62 on 4 and 55 DF,  p-value: < 2.2e-16
```

---

### Continuous by continuous interactions

`\begin{align*}
\widehat{price}_i = 59{,}078.7 &- 1{,}846.5~age_i + 17{,}594.5~I(Porsche)_i\\
&- 634.9~mileage_i + 22.5~age_i \times mileage_i
\end{align*}`

.question[
What is the predicted difference in price given each additional 1,000 miles driven on the car (while adjusting for make)?

How about for each additional year old the car is (while adjusting for make)?

What is the predicted difference in price between Porsches and Jaguars, while controlling for age and mileage?

...we've seen lots of models today. Which one "should" you use (if any)?
]

---

### Third order interactions

- Can you? I mean, ... yes, I guess.
- Should you? Probably not if you want to interpret these interactions in the context of the data.
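
---

### Sketch: what `*` expands to in an R formula

A minimal sketch using base R's `terms()` on bare formulas (no data needed) to list the terms `lm()` would estimate. It shows that `age * car` already expands to `age + car + age:car`, so the extra `age + car` in the `m_int` call is redundant, and that a third-order interaction such as `age * car * mileage` also brings in all three main effects and all three two-way interactions. The names `f_int`, `f_short`, `f_three`, and `labels_three` are illustrative only.

```r
# Formula used for m_int, and a shorter equivalent
f_int   <- price ~ age + car + age * car
f_short <- price ~ age * car

# term.labels lists the model terms after R expands and de-duplicates them
attr(terms(f_int), "term.labels")    # "age" "car" "age:car"
attr(terms(f_short), "term.labels")  # "age" "car" "age:car"

# A third-order interaction: 3 main effects + 3 two-way + 1 three-way term
f_three <- price ~ age * car * mileage
labels_three <- attr(terms(f_three), "term.labels")
length(labels_three)  # 7
labels_three
```

With `car` coded as a single Porsche indicator, each of those seven terms gets its own coefficient, which is part of why third-order models are hard to interpret in the context of the data.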