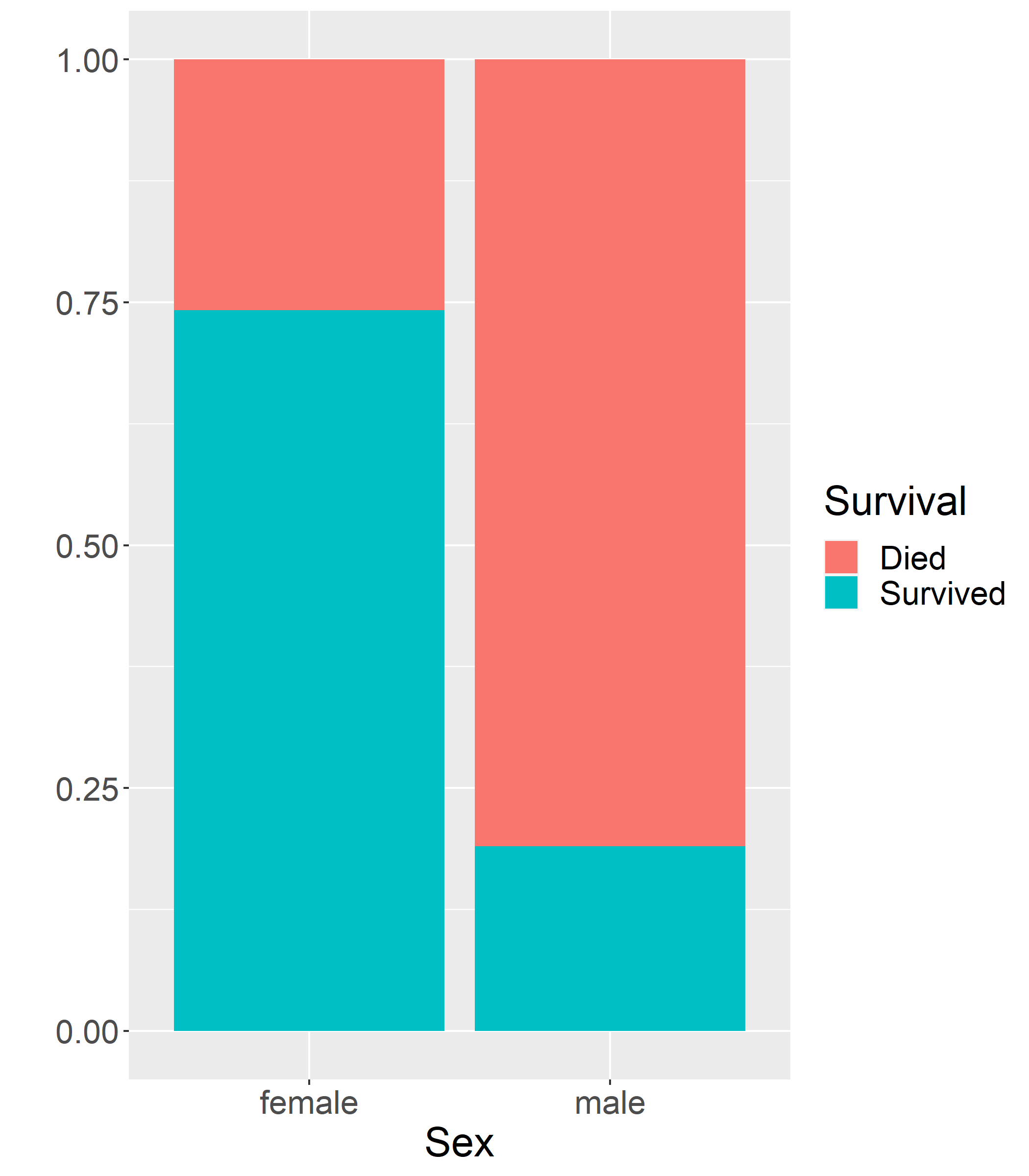

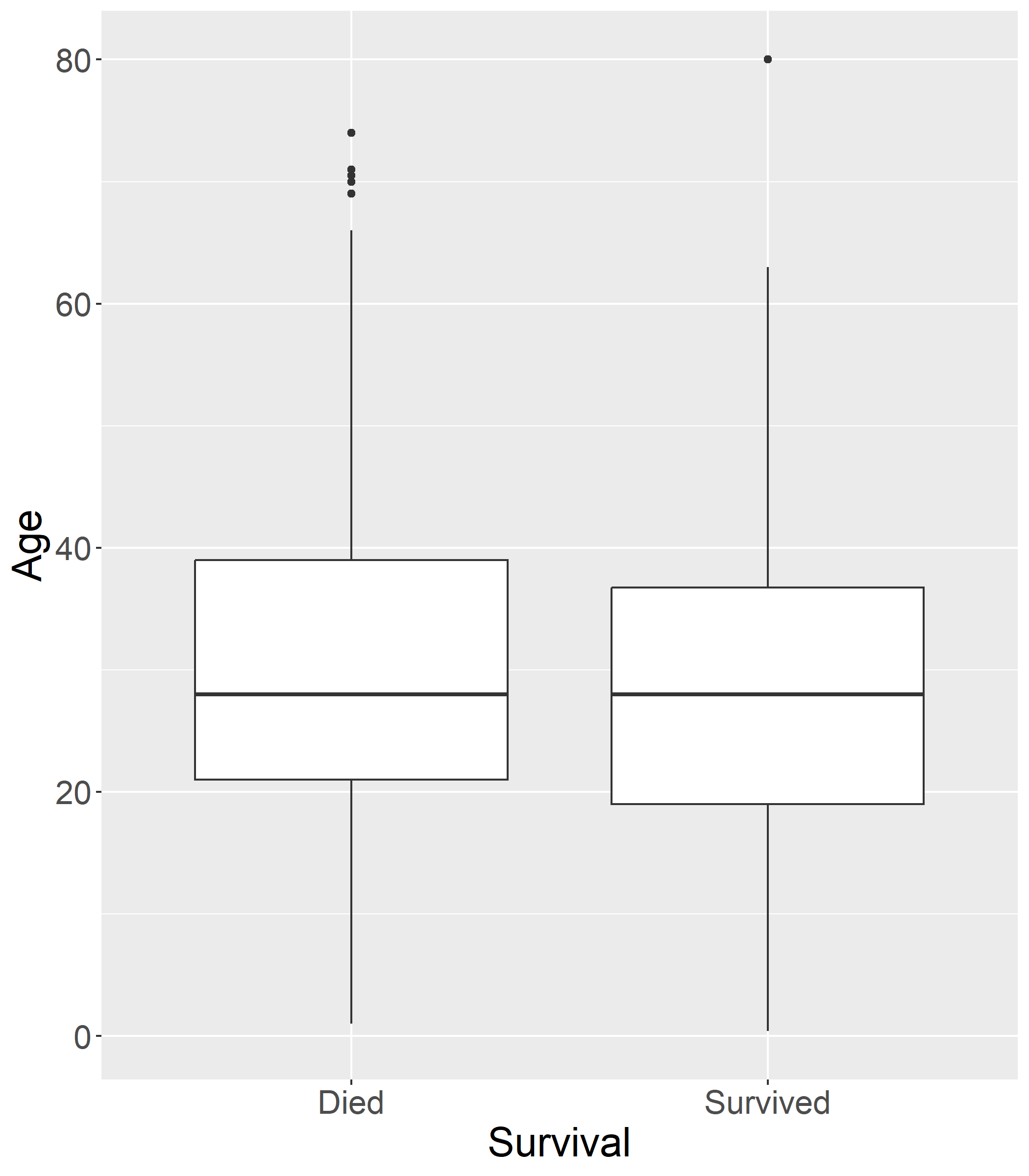

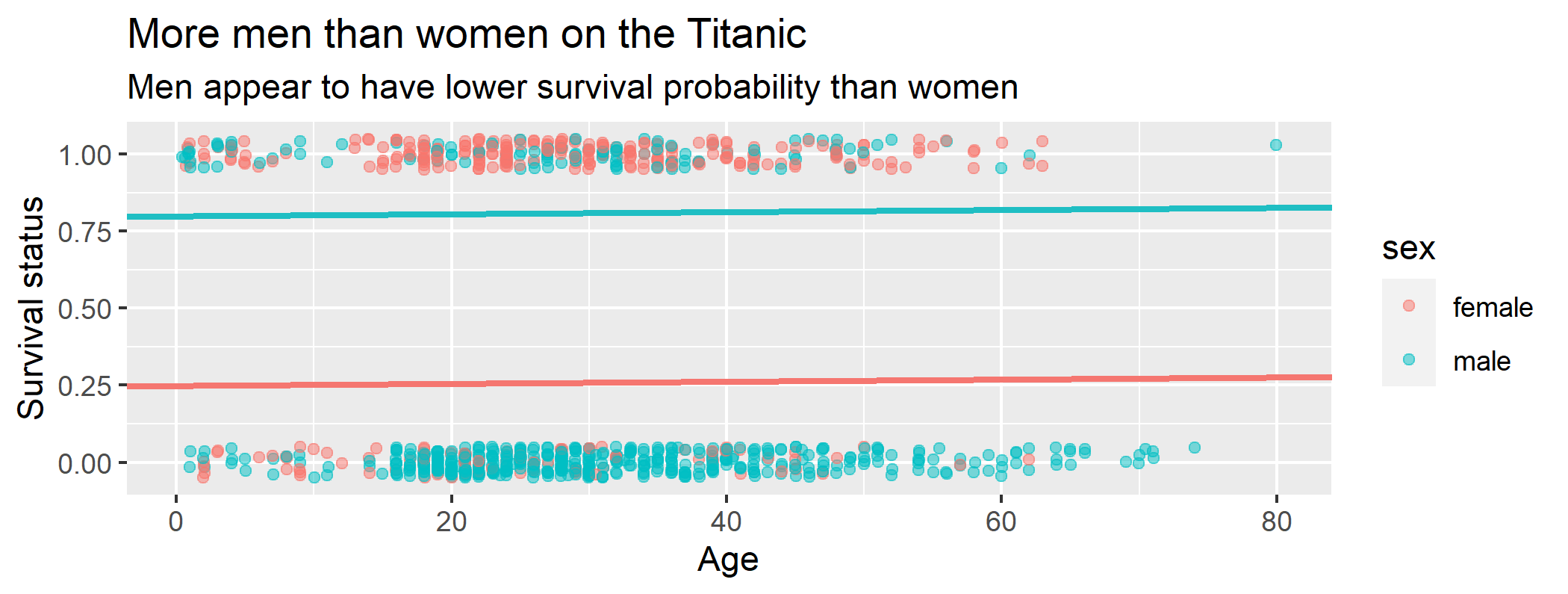

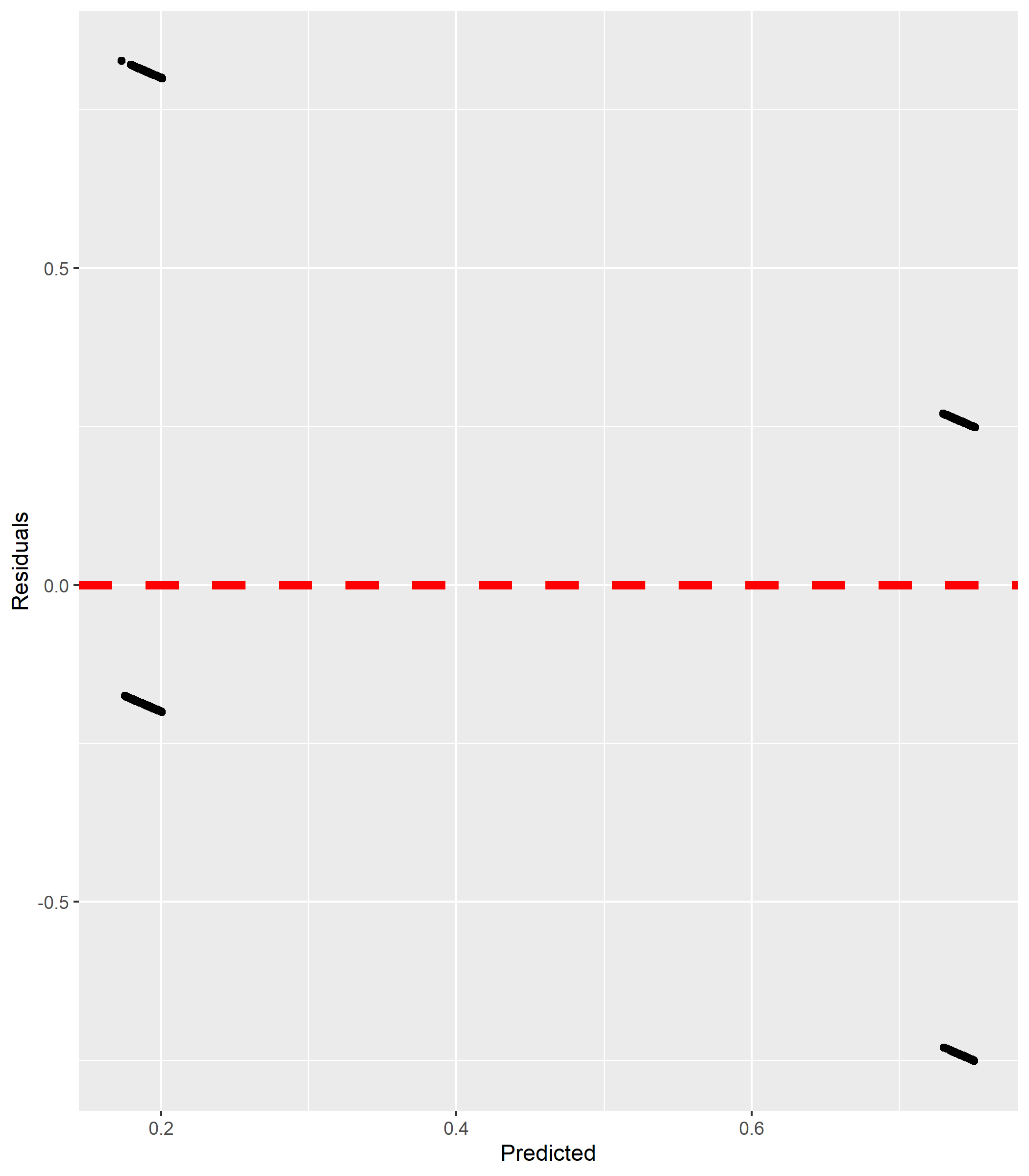

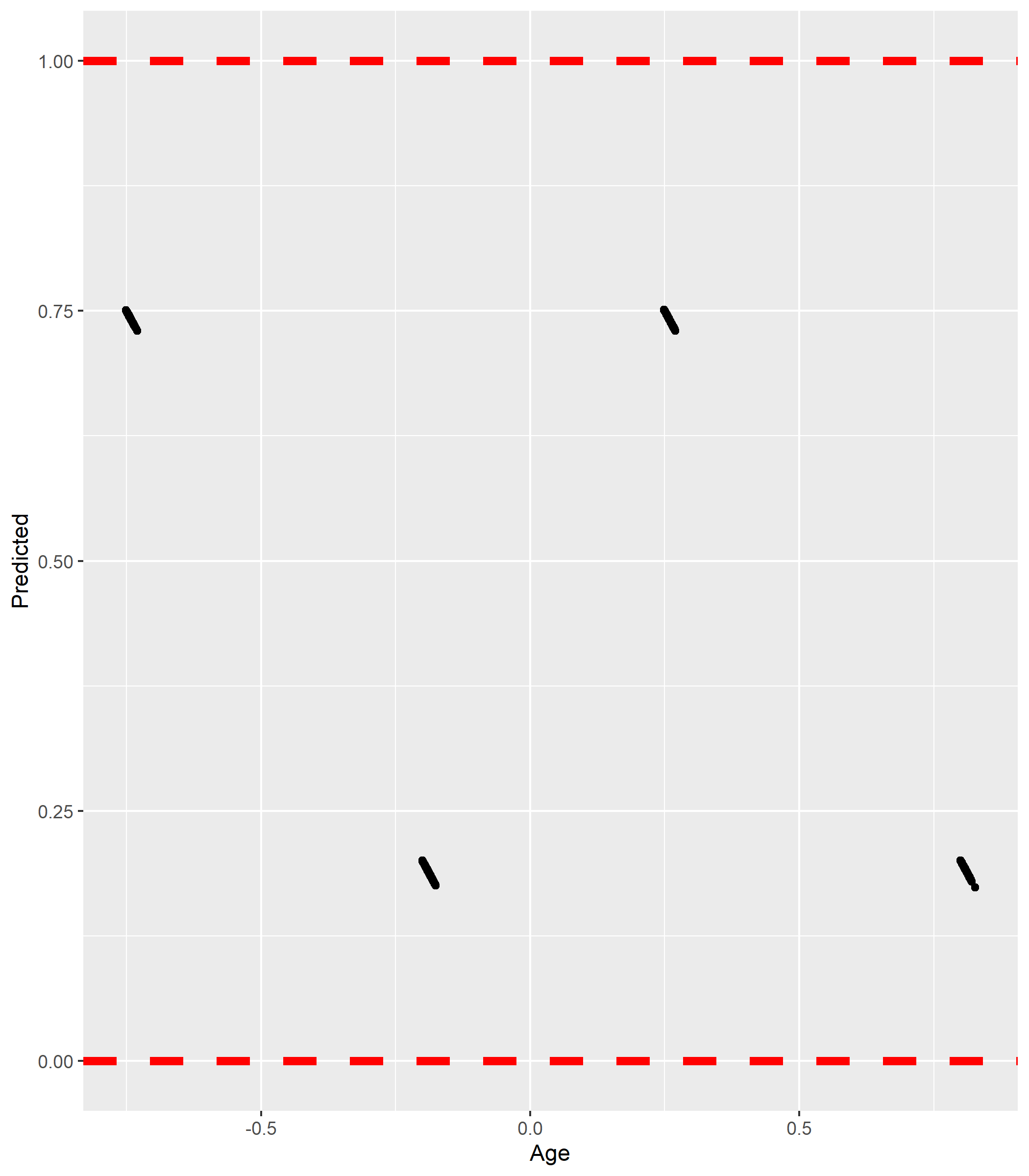

class: center, middle, inverse, title-slide # Logistic Regression ### Yue Jiang ### STA 210 / Duke University / Spring 2024 --- ### Project! --- ### Introduction Multiple regression allows us to relate a numerical response variable to one or more numerical or categorical predictors. We can use multiple regression models to understand relationships, assess differences, and make predictions. But what about a situation where the response of interest is categorical and binary? - spam or not spam - malignant or benign tumor - survived or died - admitted or denied --- ### April 15, 1912 On April 15, 1912 the famous ocean liner *Titanic* sank in the North Atlantic after striking an iceberg on its maiden voyage. The dataset `titanic.csv` contains the survival status and other attributes of individuals on the titanic. - `survived`: survival status (0 = died, 1 = survived) - `pclass`: passenger class (1 = 1st, 2 = 2nd, 3 = 3rd) - `name`: name of individual - `sex`: sex (male or female) - `age`: age in years - `fare`: passenger fare in British pounds We are interested in investigating the variables that contribute to passenger survival. Do women and children really come first? --- ### Data and packages ```r library(tidyverse) library(broom) ``` ```r titanic <- read_csv("data/titanic.csv") ``` .tiny[ ```r glimpse(titanic) ``` ``` ## Rows: 887 ## Columns: 6 ## $ pclass <dbl> 3, 1, 3, 1, 3, 3, 1, 3, 3, 2, 3, 1, 3, 3, 3, 2, 3, 2, 3, 3, 2~ ## $ name <chr> "Mr. Owen Harris Braund", "Mrs. 
John Bradley (Florence Briggs~ ## $ sex <chr> "male", "female", "female", "female", "male", "male", "male",~ ## $ age <dbl> 22, 38, 26, 35, 35, 27, 54, 2, 27, 14, 4, 58, 20, 39, 14, 55,~ ## $ fare <dbl> 7.2500, 71.2833, 7.9250, 53.1000, 8.0500, 8.4583, 51.8625, 21~ ## $ survived <dbl> 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0~ ``` ] --- ### Exploratory Data Analysis .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- ### The linear model with multiple predictors - Population model: `\begin{align*} y_i = \beta_0 + \beta_1~x_{i1} + \beta_2~x_{i2} + \cdots + \beta_p~x_{ip} + \epsilon_i \end{align*}` Denote by `\(p\)` the probability of death and consider the model below. `\begin{align*} p_i = \beta_0 + \beta_1~x_{i1} + \beta_2~x_{i2} + \cdots + \beta_p~x_{ip} + \epsilon_i \end{align*}` .question[ Can you see any problems with this approach? ] --- ### Linear regression? ```r lm_survival <- lm(survived ~ age + sex, data = titanic) tidy(lm_survival) ``` ``` ## # A tibble: 3 x 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) 0.752 0.0356 21.1 2.88e-80 ## 2 age -0.000343 0.000979 -0.350 7.26e- 1 ## 3 sexmale -0.551 0.0289 -19.1 3.50e-68 ``` --- ### Visualizing the model <!-- --> --- ### Diagnostics .pull-left[ <!-- --> ] .pull-right[ <!-- --> ] --- ### Preliminaries - Denote by `\(p\)` the probability of some event - The .vocab[odds] the event occurs is `\(\frac{p}{1-p}\)` Odds are sometimes expressed as X : Y and read X to Y. It is the ratio of successes to failures, where values larger than 1 favor a success and values smaller than 1 favor a failure. .question[ If `\(P(A) = 1/2\)`, what are the odds of `\(A\)`? ] .question[ If `\(P(B) = 1/3\)` what are the odds of `\(B\)`? ] An .vocab[odds ratio] is a ratio of odds. 
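Odds and odds ratios are simple arithmetic on probabilities. The short R sketch below uses two made-up probabilities (0.8 and 0.2, not taken from the Titanic data) and a hypothetical helper `odds()`; it is only an illustration, not part of the original analysis.

```r
# Hypothetical helper: convert a probability p (0 < p < 1) to odds p / (1 - p)
odds <- function(p) {
  p / (1 - p)
}

# Illustrative probabilities (made up, not from the Titanic data)
p_group1 <- 0.8
p_group2 <- 0.2

odds_group1 <- odds(p_group1)  # 0.8 / 0.2 = 4, i.e. 4 : 1 in favor of success
odds_group2 <- odds(p_group2)  # 0.2 / 0.8 = 0.25, i.e. 1 : 4

# Odds ratio: odds in group 1 relative to odds in group 2
odds_ratio <- odds_group1 / odds_group2  # 4 / 0.25 = 16

odds_group1
odds_group2
odds_ratio
```

Notice that the odds ratio (16) is much larger than the ratio of the two probabilities (0.8 / 0.2 = 4), so odds ratios and ratios of probabilities should not be confused.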
---

### Preliminaries

- Taking the natural log of the odds yields the .vocab[logit] of `\(p\)`

`$$\text{logit}(p) = \text{log}\left(\frac{p}{1-p}\right)$$`

The logit takes a value of `\(p\)` between 0 and 1 and outputs a value between `\(-\infty\)` and `\(\infty\)`.

The inverse logit (logistic) takes a value between `\(-\infty\)` and `\(\infty\)` and outputs a value between 0 and 1.

`$$\text{inverse logit}(x) = \frac{e^x}{1+e^x} = \frac{1}{1 + e^{-x}}$$`

There is a one-to-one relationship between probabilities and log-odds. If we create a model using the log-odds, we can "work backwards" using the logistic function to obtain probabilities between 0 and 1.

---

### Logistic Regression model

`$$\text{log}\left(\frac{p_i}{1-p_i}\right) = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip}$$`

Use the inverse logit to find the expression for `\(p_i\)`.

`$$p_i = \frac{e^{\beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip}}}{1 + e^{\beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip}}}$$`

We can use the logistic regression model to obtain predicted probabilities of success for a binary response variable.

---

### Logistic Regression model

We fit the model in R using the `glm()` function with `family = "binomial"`.

```r
logit_mod <- glm(survived ~ sex + age,
  data = titanic,
  family = "binomial"
)
tidy(logit_mod)
```

```
## # A tibble: 3 x 5
##   term        estimate std.error statistic  p.value
##   <chr>          <dbl>     <dbl>     <dbl>    <dbl>
## 1 (Intercept)  1.11      0.208       5.34  9.05e- 8
## 2 sexmale     -2.50      0.168     -14.9   3.24e-50
## 3 age         -0.00206   0.00586    -0.351 7.25e- 1
```

We can then use `augment()` to find the predicted log-odds for each observation.
```r
# The .fitted column holds predicted log-odds (augment's default link scale)
pred_log_odds <- augment(logit_mod)
```

---

### The Titanic data

.tiny[
```r
tidy(logit_mod)
```

```
## # A tibble: 3 x 5
##   term        estimate std.error statistic  p.value
##   <chr>          <dbl>     <dbl>     <dbl>    <dbl>
## 1 (Intercept)  1.11      0.208       5.34  9.05e- 8
## 2 sexmale     -2.50      0.168     -14.9   3.24e-50
## 3 age         -0.00206   0.00586    -0.351 7.25e- 1
```
]

`$$\widehat{\text{logit}(p)} = 1.11 - 2.50~sex - 0.00206~age$$`

`$$\hat{p} = \frac{e^{1.11 - 2.50~sex - 0.00206~age}}{1+e^{1.11 - 2.50~sex - 0.00206~age}}$$`

where `\(sex\)` equals 1 for males and 0 for females.

---

### Interpreting coefficients

`$$\widehat{\text{logit}(p)} = 1.11 - 2.50~sex - 0.00206~age$$`

Holding sex constant, for every additional year of age, we expect the log-odds of survival to decrease by approximately 0.002.

Holding age constant, we expect males to have a log-odds of survival that is 2.50 lower than that of females.

---

### Interpreting coefficients

`$$\widehat{\text{odds}} = e^{1.11 - 2.50~sex - 0.00206~age}$$`

Holding sex constant, for every one-year increase in age, the odds of survival are *predicted* to be multiplied by `\(e^{-0.00206} = 0.998\)`. That is, when comparing two individuals of the same sex, one of whom is one year older than the other, we predict that the older individual has approximately 0.998 times the odds of survival of the younger individual.

Holding age constant, the odds of survival for males are estimated to be `\(e^{-2.50} = 0.082\)` times the odds of survival for females.

---

### Classification

Logistic regression allows us to obtain predicted probabilities of success for a binary variable. By imposing a threshold (for example, classifying an observation as a success if its predicted probability is greater than `\(0.50\)`), we can create a classifier.
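The fitted equation can be turned into predicted probabilities and class labels by hand. The sketch below plugs the rounded estimates from the `tidy()` output into base R's `plogis()` (the inverse logit) and `qlogis()` (the logit). The two example passengers (a 30-year-old female and a 30-year-old male) are hypothetical, and because the coefficients are rounded, the results will differ slightly from those of the fitted model. With the model object itself, `predict(logit_mod, type = "response")` or `augment(logit_mod, type.predict = "response")` return probabilities directly.

```r
# Rounded estimates copied from tidy(logit_mod)
b0     <- 1.11      # (Intercept)
b_male <- -2.50     # sexmale: indicator, 1 = male, 0 = female
b_age  <- -0.00206  # age, in years

# Hypothetical passengers used only for illustration
passengers <- data.frame(
  sex = c("female", "male"),
  age = c(30, 30)
)

# Predicted log-odds of survival from the fitted equation
log_odds <- b0 + b_male * (passengers$sex == "male") + b_age * passengers$age

# Inverse logit: plogis(x) = exp(x) / (1 + exp(x))
prob <- plogis(log_odds)

# The logit (qlogis) undoes the inverse logit, recovering log_odds
qlogis(prob)

# Classify as survived (1) if the predicted probability exceeds the threshold
threshold <- 0.5
pred_class <- ifelse(prob > threshold, 1, 0)

# Odds ratios implied by the coefficients
or_male <- exp(b_male)  # about 0.082
or_age  <- exp(b_age)   # about 0.998

data.frame(passengers, log_odds, prob, pred_class)
```

With these rounded coefficients, the predicted survival probabilities are roughly 0.74 for the hypothetical female passenger and 0.19 for the hypothetical male passenger, so a 0.50 threshold classifies them as 1 (survived) and 0 (died), respectively. The 0.50 threshold is only one possible choice; other thresholds trade off different kinds of misclassification.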
- Logistic regression has assumptions: independence and linearity in the log-odds (some other methods require fewer assumptions)
- Straightforward interpretation of coefficients
- Handles numerical and categorical predictors
- Can quantify uncertainty around a prediction

---

### Inference in the logistic regression model

```r
logit_mod <- glm(survived ~ sex + age,
  data = titanic,
  family = "binomial"
)
tidy(logit_mod)
```

```
## # A tibble: 3 x 5
##   term        estimate std.error statistic  p.value
##   <chr>          <dbl>     <dbl>     <dbl>    <dbl>
## 1 (Intercept)  1.11      0.208       5.34  9.05e- 8
## 2 sexmale     -2.50      0.168     -14.9   3.24e-50
## 3 age         -0.00206   0.00586    -0.351 7.25e- 1
```

Tests of `\(H_0: \beta_k = \beta_{k, 0}\)` use a **z-statistic** (a Wald test):

`\begin{align*}
z = \frac{\widehat{\beta}_k - \beta_{k, 0}}{SE(\widehat{\beta}_k)}
\end{align*}`

where `\(z\)` has an approximately standard normal distribution under `\(H_0\)`.

---

### Confidence intervals

A 95% confidence interval for `\(\beta_k\)`, the change in log-odds associated with a one-unit increase in `\(x_k\)` (holding the other predictors constant), is given by

`\begin{align*}
\widehat{\beta}_k \pm z^\star SE(\widehat{\beta}_k)
\end{align*}`

Similarly, a 95% confidence interval for the corresponding odds ratio `\(e^{\beta_k}\)` is given by

`\begin{align*}
e^{\widehat{\beta}_k \pm z^\star SE(\widehat{\beta}_k)}
\end{align*}`

.question[
How might we interpret these quantities? What is `\(z^\star\)`?
]
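To make the formulas concrete, the sketch below recomputes the z-statistic, two-sided p-value, and 95% Wald interval for the `sexmale` coefficient from the rounded estimate and standard error in the `tidy()` output, using base R's `pnorm()` and `qnorm()`. Because the inputs are rounded, the results match the table only approximately. With the fitted model, `confint.default(logit_mod)` computes Wald intervals like these directly; `tidy(logit_mod, conf.int = TRUE)` may instead report profile-likelihood intervals, which can differ slightly.

```r
# Rounded values for the sexmale coefficient, copied from tidy(logit_mod)
beta_hat  <- -2.50
se_hat    <- 0.168
beta_null <- 0  # null hypothesis H0: beta = 0

# Wald z-statistic and two-sided p-value from the standard normal
z <- (beta_hat - beta_null) / se_hat
p_value <- 2 * pnorm(-abs(z))

# z* for a 95% interval is the 97.5th percentile of N(0, 1)
z_star <- qnorm(0.975)

# 95% CI for the coefficient (log-odds scale)
ci_log_odds <- beta_hat + c(-1, 1) * z_star * se_hat

# Exponentiate the endpoints to get a 95% CI for the odds ratio
ci_odds_ratio <- exp(ci_log_odds)

z              # about -14.9, matching the statistic column
z_star         # about 1.96
ci_log_odds
ci_odds_ratio
```

The resulting odds-ratio interval runs from roughly 0.059 to 0.114. One reading: holding age constant, we are 95% confident that the odds of survival for males are between about 0.06 and 0.11 times the odds of survival for females.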